Topic 2: Exam Set B

An application needs 10GB of RAID 1 for log files, 20GB of RAID 5 for data files, and 20GB of RAID 5 for the operating system. All disks will be 10GB in capacity. Which of the following is the MINIMUM number of disks needed for this application?

A. 6

B. 7

C. 8

D. 9

Explanation:

Let's calculate the disk requirements for each component:

RAID 1 for Log Files (10GB):

RAID 1 is mirroring - requires 2 disks minimum

2 × 10GB disks = 10GB usable space (perfect match)

Disks needed: 2

RAID 5 Arrays (20GB each):

RAID 5 requires a minimum of 3 disks and provides (n-1) × disk capacity of usable space

For EACH 20GB RAID 5 array: 3 × 10GB disks = 20GB usable

Disks needed: 3 per array

Initial Calculation: 2 (RAID 1) + 3 (Data) + 3 (OS) = 8 disks

Optimized Solution:

We can combine the two 20GB RAID 5 requirements into one larger array and divide it into two 20GB logical volumes (one for data, one for the operating system):

Total needed for data + OS: 40GB

RAID 5 with 5 disks: 5 × 10GB = 50GB raw, 40GB usable (after parity)

Disks needed: 5

Final Optimized Total: 2 (RAID 1) + 5 (combined RAID 5) = 7 disks
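The arithmetic above can be checked with a short sketch. It assumes identical 10GB disks, a simple two-disk RAID 1 mirror, and the (n-1) rule for RAID 5, and it ignores filesystem overhead; the function names are illustrative only.

```python
DISK_GB = 10  # every disk is 10GB, per the question


def raid5_usable_gb(disks: int, disk_gb: int = DISK_GB) -> int:
    """RAID 5 gives (n - 1) disks of usable space; one disk's worth holds parity."""
    if disks < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    return (disks - 1) * disk_gb


def min_disks(level: int, needed_gb: int, disk_gb: int = DISK_GB) -> int:
    """Smallest number of disks that provides needed_gb at the given RAID level."""
    if level == 1:
        # Assumes a two-disk mirror, whose usable space equals one disk.
        if needed_gb > disk_gb:
            raise ValueError("a two-disk mirror cannot exceed one disk's capacity")
        return 2
    if level == 5:
        data_disks = -(-needed_gb // disk_gb)  # ceiling division
        return max(3, data_disks + 1)  # add one disk's worth of parity
    raise ValueError(f"unsupported RAID level: {level}")


separate = min_disks(1, 10) + min_disks(5, 20) + min_disks(5, 20)
combined = min_disks(1, 10) + min_disks(5, 40)
# With only 6 disks, the mirror takes 2, leaving 4 for RAID 5:
six_disk_raid5 = raid5_usable_gb(6 - 2)

print(f"separate arrays: {separate} disks")  # 8
print(f"combined RAID 5: {combined} disks")  # 7
print(f"6-disk layout RAID 5 capacity: {six_disk_raid5}GB (40GB needed)")  # 30
```

The same helper also shows why six disks fall short: once the mirror is built, the remaining four disks provide only 30GB of RAID 5 space.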

Why Other Options Are Incorrect:

A. 6 Disks

Total usable space needed is 50GB (10GB + 20GB + 20GB).

With 6 disks, the maximum usable space in a single RAID 5 is 50GB (60GB raw - 10GB parity).

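The Fatal Flaw: Six disks cannot satisfy both requirements at once. If two disks form the RAID 1 mirror for the logs, only four remain for RAID 5, which yields just 30GB of usable space ((4 - 1) × 10GB), short of the 40GB required. If all six disks go into a single RAID 5 array instead, there is no dedicated mirror for the logs; carving the log volume out of the RAID 5 array would protect it with parity, not mirroring, so the RAID 1 requirement is not met.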

C. 8 Disks

This configuration works but is not the minimum. It uses separate RAID 5 arrays:

RAID 1 Logs: 2 disks

RAID 5 Data: 3 disks

RAID 5 OS: 3 disks

Total: 8 disks

This wastes one disk by having duplicate parity overhead instead of combining the two 20GB requirements.

D. 9 Disks

This exceeds requirements and is inefficient. It might add unnecessary disks like hot spares or use oversized arrays, but fails the "minimum" requirement.

The key insight is combining the two RAID 5 requirements to reduce parity overhead.

A server administrator is racking new servers in a cabinet with multiple connections from the servers to power supplies and the network. Which of the following should the administrator recommend to the organization to best address this situation?

A. Rack balancing

B. Cable management

C. Blade enclosure

D. Rail kits

Explanation

When racking multiple servers with multiple network and power connections, the environment can quickly become cluttered and unorganized.

Proper cable management is essential for:

Reducing downtime and errors

Clear labeling and routing prevents accidental disconnections during maintenance or troubleshooting.

Improving airflow and cooling efficiency

Poorly managed cables can block airflow, causing servers to overheat.

Simplifying troubleshooting and upgrades

Organized cables make it easier to identify connections and replace or add equipment.

Best practices for cable management in a server rack:

Use cable ties, Velcro straps, and cable organizers to secure cables neatly.

Separate power and data cables to reduce electrical interference.

Use labeled patch panels for network connections.

Maintain consistent routing paths (front-to-back or top-to-bottom).

Reference:

1.1 – Server form factors, components, and connectivity

3.2 – Implement hardware installation and management best practices

Incorrect Options

A. Rack balancing

Rack balancing focuses on evenly distributing weight in the rack to prevent tipping or structural strain.

It does not address cable clutter or connection organization, which is the primary concern here.

C. Blade enclosure

Blade enclosures integrate multiple blade servers into a shared chassis with a midplane/backplane for power and networking.

While this reduces cabling per blade, it’s a hardware solution, not a cable management strategy for standard rack servers.

D. Rail kits

Rail kits allow servers to slide into racks for easier installation and removal.

Useful for maintenance, but they do not organize or manage cables.

Summary

When dealing with multiple server connections in a cabinet:

Implementing proper cable management ensures organized, accessible, and safe connections while maintaining airflow and minimizing downtime.

An administrator is alerted to a hardware failure in a mission-critical server. The alert states that two drives have failed. The administrator notes the drives are in different RAID 1 arrays, and both are hot-swappable. Which of the following steps will be the MOST efficient?

A. Replace one drive, wait for a rebuild, and replace the next drive.

B. Shut down the server and replace the drives.

C. Replace both failed drives at the same time.

D. Replace all the drives in both degraded arrays.

Explanation

The key facts in the scenario are:

Two drives have failed, but they are in different RAID 1 arrays.

The server is mission-critical (implying minimizing downtime is vital).

The drives are hot-swappable (meaning the replacement can be performed while the server is running).

The Most Efficient Choice (C)

Since the two failed drives belong to separate, independent RAID 1 arrays, replacing both simultaneously is the most efficient and safe action.

Safety:

Each RAID 1 array (which is a mirror) still has its good, functional mirror drive intact. The failure of the drive in Array A has no bearing on the status of Array B's good drive, and vice versa. There is no risk of losing data on a second array during the first array's rebuild process.

Efficiency:

Replacing both failed drives at the same time minimizes the administrator's physical labor and allows the hardware RAID controller to initiate the rebuild process for both arrays concurrently. This cuts the total time required for the system to return to a fully redundant state compared to waiting for one array to rebuild before starting the second.
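A rough way to see the efficiency argument: if the two independent mirrors rebuild in parallel, the time to full redundancy is the longer of the two rebuilds rather than their sum. The durations below are hypothetical, and the sketch assumes the concurrent rebuilds do not slow each other down, which a busy controller may not guarantee.

```python
# Hypothetical rebuild durations in hours; real values depend on disk size,
# controller, and I/O load. Assumes parallel rebuilds do not slow each other.
REBUILD_HOURS = {"array_a": 4.0, "array_b": 4.0}


def sequential_hours(rebuilds: dict[str, float]) -> float:
    """Option A: replace one drive, wait for its rebuild, then replace the next."""
    return sum(rebuilds.values())


def concurrent_hours(rebuilds: dict[str, float]) -> float:
    """Option C: replace both drives; the independent mirrors rebuild in parallel."""
    return max(rebuilds.values())


print("sequential:", sequential_hours(REBUILD_HOURS), "hours")  # 8.0
print("concurrent:", concurrent_hours(REBUILD_HOURS), "hours")  # 4.0
```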

Why the Other Options are Incorrect

A. Replace one drive, wait for a rebuild, and replace the next drive:

This is the standard, conservative best practice when a single array is degraded by more than one failure (for example, a RAID 6 array that has lost two drives), because each rebuild should finish before the array is stressed further. However, since the failures here are in separate, independent RAID 1 arrays, this approach is unnecessarily time-consuming and inefficient.

B. Shut down the server and replace the drives:

Since the drives are hot-swappable, shutting down a mission-critical server is a major, unnecessary disruption that increases downtime, making this the least efficient option.

D. Replace all the drives in both degraded arrays:

This is excessive and costly. Only the failed drives need to be replaced to restore redundancy. Replacing the still-working drives is a waste of time and money, especially if they have not reported any errors.

Which of the following environmental controls must be carefully researched so the control itself does not cause the destruction of the server equipment?

A. Humidity control system

B. Sensors

C. Fire suppression

D. Heating system

Explanation:

Fire suppression systems present a unique paradox in data center environmental controls: the solution meant to save the equipment could potentially destroy it if chosen incorrectly. This requires extremely careful research and selection.

Why Fire Suppression Carries This Specific Risk:

Water-Based Suppression: Traditional sprinkler systems, common in office buildings, will drench server racks. This causes catastrophic failure through:

Electrical Short Circuits: Immediate and widespread short-circuiting of live electrical components.

Corrosion: Residual moisture leads to long-term corrosion of motherboards, connectors, and circuitry, causing failures long after the fire is out.

Contamination: Minerals and impurities in the water can corrode and foul sensitive components.

Chemical/Gas Suppression (Clean Agent): While these are the standard for server rooms, they still require careful research:

Agent Safety: Halon, once common, has been phased out of production because it depletes the ozone layer. Replacement agents must be researched for their safety for personnel and their effect on equipment.

Deployment Method: Inert-gas systems suppress fire by lowering the oxygen concentration in the room; the design must reduce oxygen enough to extinguish the fire without creating conditions that could harm anyone present.

Residue: Some chemical agents can leave a corrosive residue that damages components over time. The correct "clean agent" leaves no residue.

The key is that when a fire suppression system activates, it does so in an uncontrolled, emergency context. A faulty, poorly researched, or inappropriate system will cause widespread, instantaneous, and often total loss of the server equipment it was meant to protect.

Why the Other Options Are Less Likely to Cause Destruction:

A. Humidity Control System:

While critical, a failure in a humidity system typically causes gradual damage. Low humidity can lead to static discharge over time, and high humidity can cause slow corrosion. It does not pose the same risk of immediate, catastrophic destruction.

B. Sensors:

Sensors (for temperature, humidity, water detection, etc.) are passive monitoring devices. A faulty sensor might provide bad data and lead to an incorrect response from another system, but the sensor itself cannot actively destroy server equipment.

D. Heating System:

A failure of a heating system, such as a stuck valve causing overheating, could certainly damage servers. However, this damage would typically be gradual, giving monitoring systems and administrators time to detect the temperature rise and intervene. It lacks the sudden, irreversible, and total destructive potential of a wrongly deployed fire suppression system.

Conclusion:

The question highlights a control that, by its very function, could be the source of destruction. Only fire suppression fits this description, as its activation—while necessary in a fire emergency—must be meticulously planned to ensure the "cure" isn't worse than the "disease."

Reference:

This is a core principle of data center facility management. Best practices for data center fire suppression, including the use of pre-action sprinkler systems and clean agent gases, are covered in the CompTIA Server+ (SK0-005) objectives under domain 1.4 "Physical Environment."

A server technician arrives at a data center to troubleshoot a physical server that is not responding to remote management software. The technician discovers the servers in the data center are not connected to a KVM switch, and their out-of-band management cards have not been configured. Which of the following should the technician do to access the server for troubleshooting purposes?

A. Connect the diagnostic card to the PCIe connector.

B. Connect a console cable to the server NIC.

C. Connect to the server from a crash cart.

D. Connect the virtual administration console.

Explanation

When a server is not responding to remote management software and out-of-band management cards are unconfigured, the technician cannot use:

KVM over IP

iLO/iDRAC/IMM remote consoles

Virtual administration tools

In this situation, the only reliable method to access the server is physically, using a crash cart.

A crash cart typically includes:

A monitor

Keyboard

Mouse

Sometimes a USB or VGA/HDMI adapter

This allows the technician to:

Log in directly to the server OS or BIOS/UEFI

Diagnose hardware or software issues

Configure the out-of-band management card for future remote access

This is the industry-standard method when remote management is unavailable.

Reference:

3.2 – Implement hardware installation and management best practices

3.3 – Troubleshoot hardware and connectivity issues

(Direct physical access is the fallback when remote management fails.)

Incorrect Options

A. Connect the diagnostic card to the PCIe connector

PCIe diagnostic cards are used for hardware testing, POST error codes, or signal monitoring.

They cannot replace physical access if the OS is unresponsive and no remote management is configured.

Useful after crash cart access, not before.

B. Connect a console cable to the server NIC

Console cables are used for network equipment (switches, routers) or for servers with serial console management.

A server NIC is an Ethernet network interface, not a serial console port, so connecting a console cable to it will not provide access.

D. Connect the virtual administration console

Virtual administration consoles rely on functional out-of-band management cards or network connectivity.

Since the management card is unconfigured, this option is not available.

Summary

If remote management is unavailable and the server cannot be accessed over the network:

The technician must physically access the server using a crash cart to troubleshoot, configure management interfaces, or perform repairs.

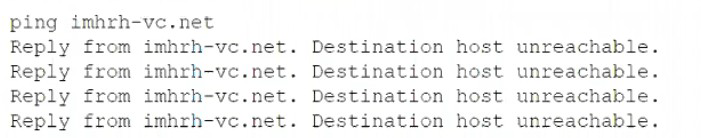

A server administrator receives the following output when trying to ping a local host:

Which of the following is MOST likely the issue?

A. Firewall

B. DHCP

C. DNS

D. VLAN

Explanation

When a local host pings another device and receives "Destination Host Unreachable," it means one of two things:

The local system (the one running the ping) determined there is no route to the destination.

An intermediate router sent an ICMP "Destination Unreachable" message back to the sender.

Because the devices are on the local network, a firewall is a common reason for traffic to be blocked before a connection can be established.

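A firewall on the destination host, or on a device in between, can be configured to block ICMP echo requests (pings). A firewall that rejects the request can answer with an ICMP "Destination Unreachable" message, while one that silently drops it produces a "Request timed out" result instead.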

However, if the error is a "Reply From

Among the options provided, a firewall is the most common reason a single protocol such as ICMP is blocked while other network functions, such as ARP, continue to work, leaving ping with an unreachable result.
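When a packet capture is available, the ICMP code inside a Destination Unreachable (type 3) reply can help separate a filtering device from a host that is simply unreachable. This sketch is a lookup of a subset of the standard codes; treating codes 9, 10, and 13 as "filtering" is a heuristic, and a firewall can also be configured to send other codes (such as port unreachable) or nothing at all. The function name explain is hypothetical.

```python
# Subset of ICMP type 3 ("Destination Unreachable") codes (RFC 792, 1122, 1812).
DEST_UNREACHABLE_CODES = {
    0: "Net unreachable",
    1: "Host unreachable",
    2: "Protocol unreachable",
    3: "Port unreachable",
    4: "Fragmentation needed and DF set",
    9: "Communication with destination network administratively prohibited",
    10: "Communication with destination host administratively prohibited",
    13: "Communication administratively prohibited",
}

# Heuristic: codes that usually indicate a filtering device such as a firewall.
FILTER_CODES = {9, 10, 13}


def explain(icmp_type: int, icmp_code: int) -> str:
    """Describe an ICMP reply, flagging codes that suggest a firewall or ACL."""
    if icmp_type != 3:
        return "not a Destination Unreachable message"
    text = DEST_UNREACHABLE_CODES.get(icmp_code, f"other code {icmp_code}")
    if icmp_code in FILTER_CODES:
        text += " (likely a firewall or ACL rejecting the traffic)"
    return text


print(explain(3, 1))
print(explain(3, 13))
```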

Why the Other Options are Less Likely

B. DHCP (Dynamic Host Configuration Protocol):

If DHCP had failed, the local host would likely have an APIPA address (e.g., 169.254.x.x) or no IP address at all, which would result in general network failure and possibly a "Network is unreachable" error, not the specific "Host Unreachable" message from a ping on the local network.

C. DNS (Domain Name System):

DNS is used for name resolution. If DNS were the issue, the command would fail to resolve the hostname and return "Unknown host" or "Name or service not known," not a successful lookup followed by a connectivity error. Since the server administrator is pinging a "local host," they may be using the IP address, which bypasses DNS entirely.

D. VLAN (Virtual Local Area Network):

A VLAN misconfiguration would typically manifest as the hosts being on different network segments without proper routing, which is a routing issue (a possible cause of "Host Unreachable"). However, a firewall is a more direct and easily isolated control that can specifically block the ICMP protocol used by ping, making it a more likely first point of failure to check.

An administrator discovers a Bash script file has the following permissions set in octal notation:

777

Which of the following is the MOST appropriate command to ensure only the root user can modify and execute the script?

A. chmod go-rwx

B. chmod u=rwx

C. chmod u+wx

D. chmod g-rwx

Explanation:

The current permission of 777 in octal notation is a major security risk.

Let's break it down:

Octal 7 means: read (4) + write (2) + execute (1) = 7

777 means: User (owner) has read, write, execute; Group has read, write, execute; Others (everyone else) has read, write, execute

This means ANY user on the system can modify, read, and execute this script.

Why chmod go-rwx is Correct:

The command chmod go-rwx removes (-) all permissions (read, write, execute) for both the group (g) and others (o).

Resulting permissions: rwx------ (Octal 700)

User (root): read, write, execute

Group: no permissions

Others: no permissions

This ensures only the root user (the owner) can modify and execute the script, which is exactly what the question requires.

Why the Other Options Are Incorrect:

B. chmod u=rwx

This sets the user's permissions to read, write, execute, but does not change the existing group and others permissions.

Result: rwxrwxrwx (still 777) - no change to the security risk.

C. chmod u+wx

This adds (+) write and execute permissions for the user, but the user already has these permissions (from the 777).

Result: rwxrwxrwx (still 777) - no meaningful change.

D. chmod g-rwx

This only removes permissions from the group (g), but leaves others (o) with full permissions.

Result: rwx---rwx (Octal 707) - others can still modify and execute the script.
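The result of each option can be modeled with Python's stat module. This sketch only reproduces the bit operation each chmod option performs on mode 777; it does not change a real file, and the helper name show is illustrative.

```python
import stat

START = 0o777  # rwxrwxrwx, as found on the script


def show(mode: int) -> str:
    """Render permission bits as ls-style text plus octal, e.g. 'rwx------ (700)'."""
    # stat.filemode expects a full st_mode, so add the regular-file type bit,
    # then drop the leading '-' file-type character.
    return f"{stat.filemode(stat.S_IFREG | mode)[1:]} ({mode:03o})"


# Each option expressed as the bit operation chmod performs on the mode.
options = {
    "A. chmod go-rwx": START & ~(stat.S_IRWXG | stat.S_IRWXO),  # clear group+other
    "B. chmod u=rwx": (START & ~stat.S_IRWXU) | stat.S_IRWXU,  # reset owner only
    "C. chmod u+wx": START | stat.S_IWUSR | stat.S_IXUSR,  # add to owner only
    "D. chmod g-rwx": START & ~stat.S_IRWXG,  # clear group only
}

for name, mode in options.items():
    print(f"{name:16} -> {show(mode)}")
```

Only option A leaves no bits set outside the owner's rwx triplet.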

Conclusion:

Only option A (chmod go-rwx) comprehensively addresses the security issue by removing all permissions from both group and others, ensuring only the root user has access to modify and execute the script.

A server room contains ten physical servers that are running applications and a cluster of three dedicated hypervisors. The hypervisors are new and only have 10% utilization. The Chief Financial Officer has asked that the IT department do what it can to cut back on power consumption and maintenance costs in the data center. Which of the following would address the request with minimal server downtime?

A. Unplug the power cables from the redundant power supplies, leaving just the minimum required.

B. Convert the physical servers to the hypervisors and retire the ten servers.

C. Reimage the physical servers and retire all ten servers after the migration is complete.

D. Convert the ten servers to power-efficient core editions.

Explanation

The scenario describes:

Ten physical servers running applications

Three underutilized hypervisors (10% utilization)

A request to reduce power consumption and maintenance costs with minimal downtime

Server virtualization is the most effective solution because:

Consolidation:

Physical servers are migrated as virtual machines (VMs) onto the hypervisors.

Reduces the number of physical machines in the data center from thirteen (ten application servers plus three hypervisors) to the three hypervisors, significantly cutting power usage, cooling requirements, and maintenance.

Minimal downtime:

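Physical-to-virtual (P2V) conversion tools, such as VMware vCenter Converter or Sysinternals Disk2vhd, can capture a running physical server as a virtual machine, so each workload needs only a brief cutover window rather than a rebuild. Once the workloads are virtual, VMware vMotion or Hyper-V Live Migration can move them between the hypervisors without downtime, satisfying the “minimal downtime” requirement.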

Cost savings:

Fewer physical machines = lower electricity and maintenance costs

Less rack space is required

Reference:

2.1 – Given a scenario, implement server virtualization solutions

2.2 – Apply power and cooling best practices

(Virtualization is the industry-standard method to reduce power usage with minimal downtime.)

Incorrect Options

A. Unplug the power cables from redundant power supplies

Reducing power from redundant PSUs does not lower server count or utilization, so cost savings are minimal.

Risky: with redundancy removed, a failure of the remaining PSU takes the server offline.

C. Reimage the physical servers and retire all ten servers after migration

Reimaging servers would cause significant downtime.

Physical servers would need to be rebuilt, configured, and tested before decommissioning.

This is more disruptive than live migration to hypervisors.

D. Convert the ten servers to power-efficient core editions

Converting each server to a core edition (for example, a Windows Server Core installation, which omits the desktop GUI) may marginally reduce resource use, but:

Servers still run physically

Maintenance costs remain

Savings are far lower than virtualization and consolidation

Summary

To reduce power consumption and maintenance costs while ensuring minimal downtime:

Migrate the workloads from the ten physical servers onto the underutilized hypervisors and retire the ten servers.

A server administrator wants to check the open ports on a server. Which of the following commands should the administrator use to complete the task?

A. nslookup

B. nbtstat

C. telnet

D. netstat -a

Explanation

To check open ports on a server, the correct command is netstat -a. This command displays all active TCP connections and the TCP and UDP ports on which the computer is listening.

Using netstat to Check Ports

The netstat command is the primary built-in tool for checking network connections and listening ports.

Here are common switches to refine your port check:

netstat -a: Shows all active connections and listening ports.

netstat -ab: Adds the b switch to display the executable name involved in creating each connection or listening port, helping you identify which application is using a port. On Windows, this switch requires an elevated (administrator) command prompt.

netstat -ano: The -o switch displays the Process ID (PID) for each connection, which you can match to a process name in Windows Task Manager. The -n switch shows addresses and port numbers numerically.

Alternative Methods

If you need to identify the application using a specific port, you can:

Use Task Manager: Run netstat -ano to get the PID, then open Windows Task Manager, go to the Details tab, and match the PID to find the process name.

Try the ss command on Linux: A modern replacement for netstat that is faster and provides similar information; for example, ss -tuln lists listening TCP and UDP ports numerically.

Why the Other Options Are Incorrect

A. nslookup:

This command is designed for Domain Name System (DNS) querying.

Its function is to resolve hostnames to IP addresses or vice versa.

It provides no information about which ports are open or listening on a server.

B. nbtstat:

This command displays NetBIOS over TCP/IP protocol statistics.

It's an older, specialized tool and is not a general utility for enumerating all open TCP and UDP ports on a modern server.

C. telnet:

This command is used to test connectivity to a single, specific port on a host.

For instance, you could use it to check if port 80 is open.

However, it cannot list all the ports that the server is currently listening on.
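To illustrate why telnet is limited to one port at a time, here is a minimal sketch of the same single-port TCP test in Python. The function name port_is_open is hypothetical; it only reports whether one port accepts a connection, whereas netstat -a reads the server's own table of listening ports.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds (like a telnet test)."""
    try:
        # create_connection resolves the host and completes the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unreachable: treat the port as not open.
        return False
```

For example, port_is_open("192.0.2.10", 443) checks a single port (the address is a documentation placeholder). Checking every port this way is slow and can trigger intrusion alerts, which is why netstat or ss is the better tool on the server itself.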

Which of the following commands should a systems administrator use to create a batch script to map multiple shares?

A. nbtstat

B. net use

C. tracert

D. netstst

Explanation

The net use command is the standard Windows command-line utility used to connect to, disconnect from, and view information about shared network resources, such as network drives or printers.

When placed inside a batch script (.bat or .cmd file), the administrator can automate the process of mapping multiple shares upon user login or system startup.

The basic syntax to map a share is:

net use [DriveLetter:] [\\ServerName\ShareName] [/persistent:{yes | no}]

Example Batch Script for Multiple Shares

A batch script to map two different shares might look like this:


@echo off

REM Delete any existing mappings for drives H: and I:

net use H: /delete /y

net use I: /delete /y

REM Map the main 'Home' share to H: and make it persistent

net use H: \\FileServer01\HomeShares\%USERNAME% /persistent:yes

REM Map the 'Public' share to I:

net use I: \\FileServer01\PublicData /persistent:yes

Why Other Options Are Incorrect

A. nbtstat:

This command is used to display NetBIOS over TCP/IP protocol statistics.

It is used for network troubleshooting and provides no function for mapping network drives.

C. tracert:

This command is used to trace the route (or path) that a packet takes from the source computer to a destination computer, primarily for network diagnostics.

It has no drive mapping function.

D. netstst:

This option is a misspelling of netstat and is not a valid Windows command.

The correct command, netstat, is used to display active network connections and listening ports, not to map shares.
