A security administrator needs to import PII data records from the production environment to the test environment for testing purposes. Which of the following would best protect data confidentiality?

A. Data masking

B. Hashing

C. Watermarking

D. Encoding

Explanation:

Data masking is the best choice for protecting confidentiality when real PII (Personally Identifiable Information) must be used in a test environment. It replaces sensitive values with fictitious but realistic substitutes, preserving the structure and format that testing depends on.

For example:

Real names → replaced with fake ones.

Credit card numbers → replaced with valid-looking but fake numbers.
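The two substitutions above can be sketched as follows. This is a minimal illustration, not a production masking tool: the name pools and the `example-salt` value are hypothetical. Masking is deterministic (seeded from a salted SHA-256 hash), so the same real value always maps to the same fake value across tables, which keeps joins working in the test database. The salt must stay out of the test environment, because anyone who holds it can confirm guesses about the original data. Fake card numbers keep the original separators and end with a valid Luhn check digit so that format validation still passes.

```python
import hashlib
import random

# Hypothetical pools of substitute values; real masking tools ship much larger dictionaries.
FAKE_FIRST = ["Alex", "Jordan", "Sam", "Taylor", "Casey"]
FAKE_LAST = ["Smith", "Lee", "Garcia", "Nguyen", "Brown"]


def _rng_for(value: str, salt: str) -> random.Random:
    """Seed a PRNG from a salted hash so the same input always masks the same way."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return random.Random(int(digest, 16))


def luhn_check_digit(partial: str) -> str:
    """Return the Luhn check digit for a digit string that does not have one yet."""
    total = 0
    for i, ch in enumerate(reversed(partial)):
        d = int(ch)
        if i % 2 == 0:  # these positions are doubled once the check digit is appended
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return str((10 - total % 10) % 10)


def luhn_valid(number: str) -> bool:
    return luhn_check_digit(number[:-1]) == number[-1]


def mask_name(name: str, salt: str = "example-salt") -> str:
    rng = _rng_for(name, salt)
    return f"{rng.choice(FAKE_FIRST)} {rng.choice(FAKE_LAST)}"


def mask_card(card: str, salt: str = "example-salt") -> str:
    """Replace every digit with a fake one, keep separators, end with a valid Luhn digit."""
    n_digits = sum(c.isdigit() for c in card)
    rng = _rng_for(card, salt)
    fake = "".join(str(rng.randint(0, 9)) for _ in range(n_digits - 1))
    fake += luhn_check_digit(fake)
    digits = iter(fake)
    return "".join(next(digits) if c.isdigit() else c for c in card)


print(mask_name("Jane Doe"))
print(mask_card("4111-1111-1111-1111"))
```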

Why the other options are incorrect:

Hashing: A one-way transformation suited to integrity checks and password storage. Hashed values cannot be reversed and no longer resemble the original data's format, so they are poorly suited to realistic testing.

Watermarking: Used for copyright protection or digital ownership, not for confidentiality.

Encoding: Converts data for transport (e.g., Base64), not secure and easily reversible.

Reference:

CompTIA CySA+ CS0-003 Objective 3.2 – "Apply security concepts appropriate to secure enterprise environments."

A Chief Information Security Officer has outlined several requirements for a new vulnerability scanning project:

Must use minimal network bandwidth

Must use minimal host resources

Must provide accurate, near real-time updates

Must not have any stored credentials in configuration on the scanner

Which of the following vulnerability scanning methods should be used to best meet these requirements?

A. Internal

B. Agent

C. Active

D. Uncredentialed

Why Agent Scanning Meets All Requirements:

Agent-based vulnerability scanning uses lightweight software installed directly on endpoints. It’s designed to:

Minimize network bandwidth: Agents collect data locally and send only essential updates, reducing traffic across segments.

Use minimal host resources: Modern agents are optimized to run with low CPU and memory impact.

Provide near real-time updates: Agents can continuously monitor and report changes without waiting for scheduled scans.

Avoid stored credentials on the scanner: Since agents operate locally, they don’t require centralized credential storage, eliminating a major security concern.

This method is especially effective in segmented networks, remote environments, or systems with limited connectivity.
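The bandwidth argument can be illustrated with a hypothetical agent that reports only what changed since its last report. This is a sketch under assumptions: the package inventory format and the `Agent` class are invented for illustration and do not reflect any vendor's agent protocol. It shows the idea that after the first full report, an unchanged host sends nothing at all.

```python
import json
from typing import Dict, Optional

Inventory = Dict[str, str]  # package name -> installed version


def diff_inventory(previous: Inventory, current: Inventory) -> dict:
    """Return only what changed between two inventory snapshots."""
    return {
        "added": {p: v for p, v in current.items() if p not in previous},
        "removed": sorted(p for p in previous if p not in current),
        "changed": {p: v for p, v in current.items() if p in previous and previous[p] != v},
    }


class Agent:
    """Hypothetical endpoint agent that reports deltas instead of full inventories."""

    def __init__(self) -> None:
        self.last_reported: Inventory = {}

    def build_report(self, current: Inventory) -> Optional[str]:
        delta = diff_inventory(self.last_reported, current)
        self.last_reported = dict(current)
        if not any(delta.values()):
            return None  # nothing changed, so nothing is sent over the network
        return json.dumps(delta, sort_keys=True)


agent = Agent()
print(agent.build_report({"openssl": "3.0.1", "bash": "5.1"}))  # first report: everything is "added"
print(agent.build_report({"openssl": "3.0.1", "bash": "5.1"}))  # None: no change, no traffic
print(agent.build_report({"openssl": "3.0.13"}))                # only the upgrade and the removal
```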

Why the Other Options Fall Short:

Internal: Too vague; it could refer to any scanning done inside the network, including active or credentialed scans, and it doesn't guarantee low bandwidth or resource usage.

Active: Sends probes across the network, consuming bandwidth and potentially impacting host performance. Often requires stored credentials for deeper scans.

Uncredentialed: Avoids credential storage, but lacks depth and accuracy. Also relies on network probing, which increases bandwidth usage and may miss internal vulnerabilities.

A security analyst needs to mitigate a known, exploited vulnerability related to an attack vector that embeds software through the USB interface. Which of the following should the analyst do first?

A. Conduct security awareness training on the risks of using unknown and unencrypted USBs.

B. Write a removable media policy that explains that USBs cannot be connected to a company asset.

C. Check configurations to determine whether USB ports are enabled on company assets.

D. Review logs to see whether this exploitable vulnerability has already impacted the company.

Why this is the correct first move:

When dealing with a known, exploited USB-based vulnerability, the most immediate and effective action is to assess the current exposure. That starts with checking whether USB ports are enabled and accessible on company devices. If they are, the organization may be vulnerable to:

Malicious USB devices (e.g., BadUSB, Rubber Ducky)

Firmware-level exploits that don’t require user interaction

Data exfiltration or remote code execution via USB interfaces

By identifying which systems have active USB ports, the analyst can:

Prioritize which assets need hardening

Disable or restrict USB access where feasible

Apply endpoint protection or device control policies

This step lays the groundwork for targeted mitigation and helps prevent further exploitation.
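One way to start the configuration check on Windows assets is to read the `Start` value of the `USBSTOR` service, where 3 (the default) lets the USB mass-storage driver load and 4 disables it. The sketch below assumes the values have already been collected from each host (the inventory is hypothetical) and only reads the local registry when run on Windows. Note a real limitation: `USBSTOR` governs mass storage only. Keystroke-injection devices such as the Rubber Ducky enumerate as keyboards, so they still need device control or endpoint protection policies.

```python
from typing import Dict, List, Optional

# USBSTOR "Start" values on Windows: 3 = driver loads on demand (default), 4 = driver disabled
USBSTOR_DISABLED = 4
USBSTOR_KEY = r"SYSTEM\CurrentControlSet\Services\USBSTOR"


def read_local_usbstor_start() -> Optional[int]:
    """Read the local USBSTOR Start value; returns None off Windows or if the key is missing."""
    try:
        import winreg  # only available on Windows
    except ImportError:
        return None
    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, USBSTOR_KEY) as key:
            value, _type = winreg.QueryValueEx(key, "Start")
            return int(value)
    except OSError:
        return None


def classify_usb_exposure(inventory: Dict[str, Optional[int]]) -> Dict[str, List[str]]:
    """Group hosts by USB mass-storage status using their collected Start values."""
    result: Dict[str, List[str]] = {"disabled": [], "enabled": [], "unknown": []}
    for host, start in sorted(inventory.items()):
        if start is None:
            result["unknown"].append(host)
        elif start == USBSTOR_DISABLED:
            result["disabled"].append(host)
        else:
            result["enabled"].append(host)
    return result


# Hypothetical values gathered from three workstations
print(classify_usb_exposure({"ws01": 3, "ws02": 4, "ws03": None}))
# {'disabled': ['ws02'], 'enabled': ['ws01'], 'unknown': ['ws03']}
```

Hosts in the `enabled` and `unknown` groups are the ones to prioritize for hardening.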

Why the other options come later:

Security awareness training: Important for long-term prevention, but it doesn’t address the immediate technical exposure.

Removable media policy: A strong policy is essential, but it’s a follow-up to understanding current configurations.

Review logs: Useful for incident response, but it doesn’t prevent future exploitation if USB ports remain active.

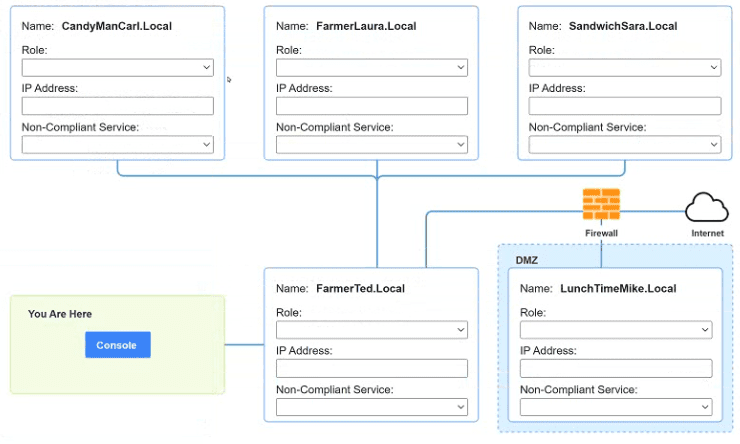

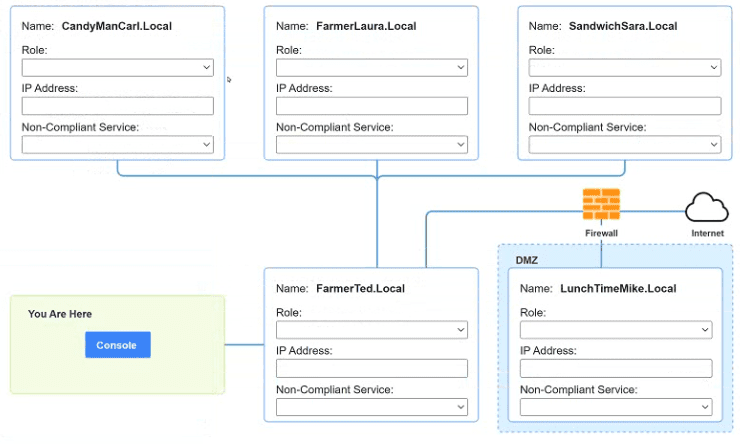

You are a penetration tester who is reviewing the system hardening guidelines for a company. The hardening guidelines state the following:

There must be one primary server or service per device.

Only default ports should be used.

Non-secure protocols should be disabled.

The corporate internet presence should be placed in a protected subnet.

Instructions:

Using the available tools, discover devices on the corporate network and the services running on these devices.

You must determine:

The IP address of each device

The primary server or service on each device

The protocols that should be disabled based on the hardening guidelines

1. Scan the Network for Devices

Use tools like:

Nmap: nmap -sn 192.168.1.0/24 to discover active hosts with a ping scan (older Nmap versions use the equivalent, now deprecated, -sP flag)

Netdiscover or Advanced IP Scanner for quick device identification

This will help you identify:

IP addresses of devices

Hostnames (if available)

MAC addresses
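If the discovery scan is saved as XML (for example with `nmap -sn -oX hosts.xml 192.168.1.0/24`), the IP address, MAC address, vendor, and hostname of each live host can be pulled out with the standard library. The sample XML below is trimmed and its hosts are hypothetical. Keep in mind that Nmap reports MAC addresses only when the scanner sits on the same layer-2 segment as the target.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Optional

# Trimmed example of Nmap XML output (hosts are hypothetical)
SAMPLE_XML = """<nmaprun>
  <host><status state="up"/>
    <address addr="192.168.1.10" addrtype="ipv4"/>
    <address addr="00:0C:29:AA:BB:CC" addrtype="mac" vendor="VMware"/>
    <hostnames><hostname name="web01.corp.local" type="PTR"/></hostnames>
  </host>
  <host><status state="down"/>
    <address addr="192.168.1.11" addrtype="ipv4"/>
  </host>
</nmaprun>"""


def parse_live_hosts(nmap_xml: str) -> List[Dict[str, Optional[str]]]:
    """Extract IP, MAC, vendor, and hostname for every host Nmap reported as up."""
    hosts = []
    for host in ET.fromstring(nmap_xml).findall("host"):
        status = host.find("status")
        if status is None or status.get("state") != "up":
            continue
        entry: Dict[str, Optional[str]] = {"ip": None, "mac": None, "vendor": None, "hostname": None}
        for addr in host.findall("address"):
            if addr.get("addrtype") == "ipv4":
                entry["ip"] = addr.get("addr")
            elif addr.get("addrtype") == "mac":
                entry["mac"] = addr.get("addr")
                entry["vendor"] = addr.get("vendor")
        name = host.find("hostnames/hostname")
        if name is not None:
            entry["hostname"] = name.get("name")
        hosts.append(entry)
    return hosts


for h in parse_live_hosts(SAMPLE_XML):
    print(h)
```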

2. Identify Services Running on Each Device

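Use:

nmap -sV <target> for service and version detection

nmap -O <target> for OS detection (requires root/administrator privileges)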

This helps determine:

The primary server or service per device

Whether only default ports are used
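Once `nmap -sV` results have been reduced to (IP, port, service) tuples, both checks above can be automated. This is a sketch under stated assumptions: the service-to-port map is simplified and not exhaustive, Nmap may label TLS-wrapped services differently (for example `ssl/http`), and the scan results are hypothetical. A host with more than one service is flagged for review rather than judged a violation, since a management service such as SSH may legitimately run alongside the primary one.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Simplified map of Nmap service names to default TCP ports (assumption: not exhaustive)
DEFAULT_PORTS = {"ftp": 21, "ssh": 22, "telnet": 23, "smtp": 25, "domain": 53,
                 "http": 80, "imap": 143, "https": 443, "imaps": 993}

Finding = Tuple[str, int, str]  # (ip, port, service name reported by nmap -sV)


def review_services(findings: List[Finding]):
    """Flag services on non-default ports and hosts exposing more than one service."""
    non_default = []
    services_per_host: Dict[str, Set[str]] = defaultdict(set)
    for ip, port, service in findings:
        services_per_host[ip].add(service)
        expected = DEFAULT_PORTS.get(service)
        if expected is not None and port != expected:
            non_default.append((ip, service, port, expected))
    multi_service = {ip: sorted(s) for ip, s in services_per_host.items() if len(s) > 1}
    return non_default, multi_service


# Hypothetical scan results
results = [("10.1.1.10", 80, "http"), ("10.1.1.11", 2222, "ssh"),
           ("10.1.1.12", 25, "smtp"), ("10.1.1.12", 21, "ftp")]
print(review_services(results))
```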

3. Detect Non-Secure Protocols

Look for:

Telnet (port 23), FTP (port 21), HTTP (port 80), IMAP (port 143), etc.

These should be flagged for disabling in favor of secure alternatives like SSH, SFTP, HTTPS, and IMAPS
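The flagging rule above can be written as a small lookup from default port to protocol and secure replacement. The table mirrors the protocols listed above, and the open ports are hypothetical. Because it keys on port numbers only, a non-secure service moved to a non-default port would slip past this check; pairing it with the service names from `nmap -sV` closes that gap.

```python
from typing import List, Tuple

# Non-secure protocols from the list above, keyed by default port, with a secure replacement
INSECURE = {
    21: ("FTP", "SFTP or FTPS"),
    23: ("Telnet", "SSH"),
    80: ("HTTP", "HTTPS"),
    143: ("IMAP", "IMAPS"),
}


def protocols_to_disable(open_ports: List[Tuple[str, int]]) -> List[str]:
    """Return one recommendation per (ip, port) that matches a known non-secure protocol."""
    recs = []
    for ip, port in sorted(open_ports):
        if port in INSECURE:
            name, replacement = INSECURE[port]
            recs.append(f"{ip}: disable {name} ({port}/tcp), use {replacement}")
    return recs


# Hypothetical open ports from the service scan
for line in protocols_to_disable([("10.1.1.20", 23), ("10.1.1.10", 443), ("10.1.1.10", 80)]):
    print(line)
# 10.1.1.10: disable HTTP (80/tcp), use HTTPS
# 10.1.1.20: disable Telnet (23/tcp), use SSH
```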

4. Check Network Segmentation

Use traceroute or firewall rules to verify:

Whether the corporate internet-facing services are placed in a DMZ or protected subnet
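A quick first pass on segmentation is to confirm that every internet-facing service has an address inside the protected subnet. The DMZ range and service addresses below are hypothetical. Address placement is only a proxy: whether the subnet is actually protected still depends on the firewall rules and traceroute results described above.

```python
import ipaddress
from typing import Dict, List

# Hypothetical DMZ (protected subnet) for internet-facing services
DMZ = ipaddress.ip_network("172.16.50.0/24")


def outside_dmz(internet_facing: Dict[str, str], dmz=DMZ) -> List[str]:
    """Return names of internet-facing services whose address is not inside the DMZ."""
    return sorted(name for name, ip in internet_facing.items()
                  if ipaddress.ip_address(ip) not in dmz)


print(outside_dmz({"www": "172.16.50.10", "mail": "192.168.1.25"}))  # ['mail']
```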

The Chief Information Security Officer is directing a new program to reduce attack surface risks and threats as part of a zero trust approach. The IT security team is required to come up with priorities for the program. Which of the following is the best priority based on common attack frameworks?

A. Reduce the administrator and privileged access accounts

B. Employ a network-based IDS

C. Conduct thorough incident response

D. Enable SSO to enterprise applications

Explanation:

As part of a Zero Trust security model, the core principle is "never trust, always verify." This approach aims to minimize the attack surface by limiting unnecessary access and enforcing strict identity and access controls.

According to common attack frameworks like MITRE ATT&CK, attackers frequently target privileged and administrator accounts to escalate privileges, move laterally, and maintain persistence. Therefore:

Reducing the number of privileged accounts

Restricting their use

Enforcing least privilege

is the most effective way to reduce risks early in a Zero Trust program.
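In practice, reducing privileged accounts often starts with a review of who holds admin group membership and whether each account is still used. The sketch below assumes a hypothetical directory export and an assumed 90-day staleness threshold; the group names are standard Active Directory administrative groups. Stale privileged accounts become removal candidates, and active ones go to owners for re-certification under least privilege.

```python
from datetime import date, timedelta
from typing import Dict, List

# Standard Active Directory admin groups; the 90-day threshold is an assumed policy value
PRIVILEGED_GROUPS = {"Domain Admins", "Enterprise Admins", "Administrators"}
STALE_AFTER = timedelta(days=90)


def privileged_account_review(accounts: List[Dict], today: date) -> Dict[str, List[str]]:
    """Split privileged accounts into removal candidates (stale) and ones to re-certify."""
    review: Dict[str, List[str]] = {"remove_candidates": [], "recertify": []}
    for acct in accounts:
        if not PRIVILEGED_GROUPS & set(acct["groups"]):
            continue  # not privileged; out of scope for this review
        last = acct.get("last_logon")
        if last is None or today - last > STALE_AFTER:
            review["remove_candidates"].append(acct["name"])
        else:
            review["recertify"].append(acct["name"])
    return review


# Hypothetical directory export
accounts = [
    {"name": "alice.adm", "groups": ["Domain Admins"], "last_logon": date(2024, 5, 20)},
    {"name": "old.svc", "groups": ["Administrators"], "last_logon": date(2023, 11, 1)},
    {"name": "bob", "groups": ["Domain Users"], "last_logon": date(2024, 5, 30)},
]
print(privileged_account_review(accounts, today=date(2024, 6, 1)))
# {'remove_candidates': ['old.svc'], 'recertify': ['alice.adm']}
```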

Why not the other options?

Employ a network-based IDS: Useful for detection, but reactive, not preventive. Also not a core Zero Trust priority.

Conduct thorough incident response: Important, but it's a post-incident process, not directly related to reducing the attack surface.

Enable SSO to enterprise applications: Good for usability, but can increase risk if not combined with strict access controls (especially if SSO tokens are compromised).

Reference:

NIST Zero Trust Architecture (SP 800-207): Emphasizes identity governance and least privilege access as primary controls.

Which of the following techniques can help a SOC team to reduce the number of alerts related to the internal security activities that the analysts have to triage?

A. Enrich the SIEM-ingested data to include all data required for triage.

B. Schedule a task to disable alerting when vulnerability scans are executing.

C. Filter all alarms in the SIEM with low severity.

D. Add a SOAR rule to drop irrelevant and duplicated notifications.

Explanation:

The goal is to reduce alerts from internal security activities (e.g., vulnerability scans, internal testing) that burden SOC analysts with triage. This requires a solution that directly lowers alert volume, ideally through automation or targeted filtering, while preserving detection of real threats. The CS0-003 exam emphasizes Security Operations, including optimizing SIEM and SOAR tools to manage alerts efficiently. Each option is evaluated for its ability to reduce alerts from internal activities, considering practicality, specificity, and alignment with exam objectives like process improvement (Objective 1.5) and tool utilization (Objective 1.4).

Correct Answer: Add a SOAR rule to drop irrelevant and duplicated notifications.

Adding a Security Orchestration, Automation, and Response (SOAR) rule to drop irrelevant and duplicated notifications is the most effective technique for reducing alerts from internal security activities. SOAR platforms, like Splunk SOAR or IBM Resilient, automate SOC workflows by executing playbooks that filter alerts based on predefined criteria, such as source IP, event type, or duplication. For example, a playbook can identify alerts from a known vulnerability scanner’s IP (e.g., Nessus) and suppress them, preventing analysts from triaging these routine events. It can also consolidate duplicated alerts, such as multiple logs for the same scan event, reducing volume further. This approach is dynamic, scalable, and aligns with CS0-003’s focus on automation (Objective 1.5). By targeting internal activities specifically, SOAR rules minimize alert fatigue without risking oversight of legitimate threats. Setup requires defining “irrelevant” alerts accurately, but once configured, it significantly lowers triage workload, making it ideal for modern SOCs.
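The filtering logic such a playbook applies can be sketched in plain Python. This is not the Splunk SOAR or IBM Resilient API; the scanner IP, alert fields, and 5-minute deduplication window are assumptions for illustration. It drops alerts from a known scanner and collapses repeats of the same source and signature that arrive within the window of the previous occurrence. Keep the scanner allowlist tight: an attacker operating from an allowlisted host would be silenced too.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set, Tuple

KNOWN_SCANNERS: Set[str] = {"10.0.5.20"}  # hypothetical internal vulnerability scanner


@dataclass(frozen=True)
class Alert:
    source_ip: str
    signature: str
    timestamp: float  # seconds since epoch


def triage_filter(alerts: Iterable[Alert], known_scanners: Set[str] = KNOWN_SCANNERS,
                  dedup_window: float = 300.0) -> List[Alert]:
    """Drop alerts from known scanners and repeats of the same (source, signature) pair."""
    kept: List[Alert] = []
    last_seen: Dict[Tuple[str, str], float] = {}
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        if alert.source_ip in known_scanners:
            continue  # expected internal activity, not worth an analyst's time
        key = (alert.source_ip, alert.signature)
        previous = last_seen.get(key)
        last_seen[key] = alert.timestamp
        if previous is not None and alert.timestamp - previous < dedup_window:
            continue  # duplicate of an alert seen within the window
        kept.append(alert)
    return kept


alerts = [
    Alert("10.0.5.20", "Port scan", 0),
    Alert("203.0.113.7", "SSH brute force", 10),
    Alert("203.0.113.7", "SSH brute force", 70),
    Alert("203.0.113.7", "SSH brute force", 1000),
]
for a in triage_filter(alerts):
    print(a)
```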

Incorrect Answers

Enrich the SIEM-ingested data to include all data required for triage.

Enriching SIEM-ingested data involves adding contextual information, such as asset details, user identities, or threat intelligence, to logs within a Security Information and Event Management (SIEM) system like Splunk or QRadar. This helps analysts understand alerts faster by providing details, like identifying an alert’s source as an internal scanner. However, it does not reduce the number of alerts generated, as the SIEM still logs all events from internal activities, such as vulnerability scans. Instead, it improves triage efficiency by reducing investigation time, which doesn’t meet the question’s goal of lowering alert volume. While valuable for analysis (CS0-003 Objective 1.3), enrichment doesn’t address the root issue of excessive alerts from internal processes, making it less effective than automation-based solutions like SOAR rules.

Schedule a task to disable alerting when vulnerability scans are executing.

Scheduling a task to disable alerting during vulnerability scans reduces alerts by suppressing notifications from known internal activities, such as scans by tools like Nessus or Qualys. For instance, a SIEM rule could block alerts from a scanner’s IP during a 2 a.m.–4 a.m. scan window. This targets a common source of internal alerts, aligning with CS0-003 Objective 1.4 (tool utilization). However, it’s limited to predefined schedules and may miss unscheduled scans, penetration tests, or other internal activities generating alerts. Misconfiguration risks suppressing real threats, and it lacks the flexibility of SOAR rules, which can filter alerts dynamically based on multiple criteria. While effective for specific scenarios, it’s less comprehensive than the SOAR option.
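The limitation described above is easy to see in a sketch of window-based suppression. The scanner address is hypothetical, and the window matches the 2 a.m. to 4 a.m. example. The same scanner running outside the window still generates alerts, which is exactly the gap a context-aware SOAR rule avoids.

```python
from datetime import datetime, time

SCANNER_IP = "10.0.5.20"                 # hypothetical scanner address
SCAN_WINDOW = (time(2, 0), time(4, 0))   # the 2 a.m. to 4 a.m. window from the example


def is_suppressed(source_ip: str, when: datetime) -> bool:
    """Suppress only scanner alerts that fall inside the scheduled window."""
    start, end = SCAN_WINDOW
    return source_ip == SCANNER_IP and start <= when.time() < end


print(is_suppressed("10.0.5.20", datetime(2024, 6, 1, 2, 30)))  # True: scheduled scan
print(is_suppressed("10.0.5.20", datetime(2024, 6, 1, 14, 0)))  # False: ad hoc scan still alerts
```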

Filter all alarms in the SIEM with low severity.

Filtering all low-severity alarms in a SIEM reduces alert volume by excluding events deemed low priority based on predefined severity levels. However, this approach is overly broad and not specific to internal security activities. Vulnerability scans or internal tests can generate medium- or high-severity alerts (e.g., for critical vulnerabilities), which wouldn’t be filtered, leaving many internal alerts for triage. Additionally, filtering low-severity alerts risks missing legitimate threats misclassified as low priority, compromising security. This option aligns loosely with CS0-003 Objective 1.2 (system architecture in security operations) but lacks precision. It doesn’t target internal activities effectively, making it less suitable than SOAR rules, which can filter based on context like source or event type.

References

CompTIA CySA+ (CS0-003) Exam Objectives: Domain 1 (Security Operations), Objectives 1.3 (Analyze indicators of potentially malicious activity), 1.4 (Utilize tools/techniques to identify malicious activity), and 1.5 (Recommend process improvements to security operations).

Additional Notes

SOAR in SOCs: SOAR platforms are increasingly critical for reducing alert fatigue, a key challenge addressed in CS0-003. Practice configuring SOAR playbooks in tools like Splunk SOAR for performance-based questions (PBQs).

SIEM Optimization: Understanding SIEM rule tuning and alert filtering is essential for Domain 1. The SOAR option leverages automation, a trend emphasized in the CS0-003 update.

Alert Fatigue: The exam tests solutions to manage high alert volumes, making automation and targeted filtering critical skills.

Which of the following describes how a CSIRT lead determines who should be communicated with and when during a security incident?

A. The lead should review what is documented in the incident response policy or plan

B. Management level members of the CSIRT should make that decision

C. The lead has the authority to decide who to communicate with at any time

D. Subject matter experts on the team should communicate with others within the specified area of expertise

Explanation:

The question focuses on how a Computer Security Incident Response Team (CSIRT) lead determines who to communicate with and when during a security incident, a critical aspect of the Incident Response and Management domain (20% weighting) in the CompTIA CySA+ (CS0-003) exam. Effective communication is essential for coordinating response efforts, ensuring stakeholder alignment, and complying with organizational policies. The correct approach should align with established incident response processes, emphasizing structure and adherence to organizational guidelines, as tested on the exam.

Correct Answer:

The lead should review what is documented in the incident response policy or plan.

The CSIRT lead determines who to communicate with and when by reviewing the organization’s incident response policy or plan. This document outlines the communication protocols, including roles, responsibilities, and timelines for notifying stakeholders such as management, legal teams, employees, customers, or regulatory bodies. For example, the plan may specify notifying senior management within 24 hours of a confirmed breach or contacting law enforcement for criminal incidents. It ensures consistency, compliance with regulations (e.g., GDPR, HIPAA), and alignment with organizational priorities. By following the policy, the lead avoids ad-hoc decisions, reduces errors, and ensures all necessary parties are informed appropriately.
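A plan's communication matrix is essentially a lookup table, which makes the "review the plan" step concrete. The categories, audiences, and most deadlines below are hypothetical placeholders; only the 72-hour deadline for notifying a GDPR supervisory authority reflects a real regulation. An incident type the plan does not cover raises an error instead of inviting an ad-hoc decision.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Notification:
    audience: str
    deadline_hours: int  # hours after the incident is confirmed


# Hypothetical excerpt of an IR plan's communication matrix. Only the 72-hour GDPR
# supervisory-authority deadline reflects a real regulation; the rest are placeholders.
COMM_PLAN: Dict[str, List[Notification]] = {
    "low": [Notification("SOC manager", 24)],
    "high": [Notification("CISO", 1), Notification("Senior management", 24),
             Notification("Legal", 4)],
    "personal_data_breach": [Notification("CISO", 1), Notification("Legal", 4),
                             Notification("Supervisory authority (GDPR)", 72)],
}


def notification_schedule(category: str) -> List[Notification]:
    """Return who must be told, soonest deadline first, as written in the plan."""
    if category not in COMM_PLAN:
        raise ValueError(f"No communication procedure for {category!r}; follow the plan's escalation path")
    return sorted(COMM_PLAN[category], key=lambda n: n.deadline_hours)


for n in notification_schedule("high"):
    print(f"{n.audience}: within {n.deadline_hours}h")
```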

Incorrect Answers:

Management level members of the CSIRT should make that decision.

Assigning communication decisions to management-level CSIRT members is not ideal, as it bypasses the structured guidance provided by the incident response policy. While management may provide strategic oversight, the CSIRT lead is responsible for operational decisions, including communication, based on predefined protocols. Decentralizing this responsibility to management risks inconsistent messaging, delays, or misalignment with regulatory or organizational requirements. For instance, management may not be aware of technical details or legal obligations outlined in the policy.

The lead has the authority to decide who to communicate with at any time.

While the CSIRT lead has significant authority during an incident, making communication decisions arbitrarily without referencing the incident response policy is risky. Ad-hoc decisions may lead to missed notifications, regulatory non-compliance, or inappropriate disclosures (e.g., sharing sensitive details prematurely). The incident response plan provides a structured framework, specifying who (e.g., legal, PR, regulators) and when (e.g., within 72 hours for GDPR) to communicate, ensuring consistency and accountability. This option ignores CS0-003 Objective 3.1, which stresses adherence to documented processes, and frameworks like NIST SP 800-61r2, which advocate for predefined communication plans to avoid errors during high-pressure incidents. Structured guidance is critical for effective incident management.

Subject matter experts on the team should communicate with others within the specified area of expertise.

Allowing subject matter experts (SMEs) to independently communicate within their areas of expertise fragments the incident response process. SMEs, such as forensic analysts or network engineers, focus on technical tasks, not strategic communication. Without centralized coordination by the CSIRT lead, guided by the incident response plan, SME communications could lead to inconsistent messaging, premature disclosures, or confusion among stakeholders. For example, an SME discussing a breach with external vendors without approval could violate confidentiality. This approach contradicts CS0-003 Objective 3.1 and NIST SP 800-61r2, which emphasize the CSIRT lead’s role in managing communications per the policy to ensure clarity, compliance, and alignment with organizational goals.

References

CompTIA CySA+ (CS0-003) Exam Objectives: Domain 3 (Incident Response and Management), Objective 3.1 (Explain the importance of incident response planning and processes).

Additional Notes

Incident Response Planning: CS0-003 emphasizes the importance of a well-defined incident response plan (Objective 3.1). Practice reviewing sample plans to understand communication workflows for performance-based questions (PBQs).

CSIRT Lead Role: The lead coordinates all aspects of incident response, including communication, ensuring alignment with organizational and regulatory requirements.

Communication Best Practices: Timely, accurate, and policy-driven communication prevents escalation of incidents and maintains stakeholder trust, a key focus in the exam.

During an incident, an analyst needs to acquire evidence for later investigation. Based on volatility level, which of the following must be collected first from a computer system?

A. Disk contents

B. Backup data

C. Temporary files

D. Running processes

Explanation:

The question addresses a key aspect of the Incident Response and Management domain (20% weighting) in the CompTIA CySA+ (CS0-003) exam, specifically focusing on evidence collection during a security incident. The concept of volatility is critical in digital forensics, as it determines the order in which evidence should be collected to preserve data that is most likely to be lost or altered. The correct answer must prioritize the most volatile evidence, aligning with CS0-003 Objective 3.2 (Given a scenario, apply the appropriate incident response procedures), which includes forensic best practices like those outlined in NIST SP 800-86.

Correct Answer:

Running processes must be collected first due to their high volatility. These are active programs and services held in a computer’s memory (RAM), and they are lost upon system shutdown or reboot. In a security incident, capturing running processes (e.g., with tools like ps or tasklist during live response) provides critical evidence, such as malicious processes, malware, or unauthorized applications. For example, the in-memory state of a rogue process such as a remote access trojan (RAT) is lost once it terminates or the system restarts, making immediate collection essential. The order of volatility, per NIST SP 800-86, prioritizes memory-based data (e.g., running processes) over less volatile data like disk contents. This ensures analysts preserve ephemeral evidence for later investigation, aligning with CS0-003’s focus on effective incident response procedures.
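As a rough illustration of live process capture on Linux, the sketch below reads `/proc`, records each process ID, name, and command line with a UTC timestamp, and hashes the record to support chain of custody. It is a teaching sketch under clear assumptions, not a forensic tool. It is Linux-only, running any script on a suspect host changes memory, and a rootkit can hide processes from `/proc`. For these reasons, responders typically run trusted binaries from external media and also take a full memory image.

```python
import hashlib
import json
import os
import time
from typing import Dict, List, Tuple


def snapshot_processes(proc_root: str = "/proc") -> Tuple[Dict, str]:
    """Record running processes from Linux /proc and return (record, SHA-256 of the record)."""
    processes: List[Dict] = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # only numeric directories represent processes
        base = os.path.join(proc_root, entry)
        try:
            with open(os.path.join(base, "comm"), errors="replace") as f:
                name = f.read().strip()
            with open(os.path.join(base, "cmdline"), "rb") as f:
                cmdline = f.read().replace(b"\x00", b" ").decode(errors="replace").strip()
        except OSError:
            continue  # process exited mid-scan or access was denied
        processes.append({"pid": int(entry), "name": name, "cmdline": cmdline})
    processes.sort(key=lambda p: p["pid"])
    record = {
        "collected_at_utc": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime()),
        "processes": processes,
    }
    digest = hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()
    return record, digest


record, digest = snapshot_processes()
print(f"{len(record['processes'])} processes captured, sha256={digest}")
```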

Incorrect Answers:

Disk contents: Disk contents, such as files on a hard drive or SSD, are less volatile than running processes because they persist even after a system reboot, unless deliberately modified. Collecting disk contents (e.g., via disk imaging with tools like FTK Imager) is crucial for forensic analysis, as it includes logs, configuration files, and potential malware. However, in the order of volatility, disk contents rank lower than memory-based data like running processes, as they are not immediately at risk of being lost. Per NIST SP 800-86, disk data should be collected after volatile memory data. While important for CS0-003 Objective 3.2, disk contents are not the first priority due to their relative stability.

Backup data: Backup data, stored on external drives, cloud services, or backup systems, is among the least volatile evidence, as it is designed to persist long-term and is typically isolated from the affected system. Collecting backups can provide historical context or clean system states for comparison during an investigation. However, backups are not time-sensitive in the same way as running processes, which reside in volatile memory and can be lost upon system shutdown. According to NIST SP 800-86, backup data is collected later in the forensic process, after volatile and primary storage data. For CS0-003, prioritizing backups over running processes would risk losing critical ephemeral evidence.

Temporary files: Temporary files, such as those in /tmp or Windows Temp folders, are more volatile than disk contents or backups but less volatile than running processes. These files, often created by applications or the operating system, may be overwritten or deleted during normal system operation or by malicious activity. While important for identifying artifacts like malware droppers, temporary files reside on disk, making them more persistent than memory-based running processes. NIST SP 800-86 places temporary files below memory data in the order of volatility. For CS0-003 Objective 3.2, collecting temporary files is necessary but secondary to capturing running processes, which are at greater risk of immediate loss.

References:

CompTIA CySA+ (CS0-003) Exam Objectives: Domain 3 (Incident Response and Management), Objective 3.2 (Given a scenario, apply the appropriate incident response procedures). Available at https://www.comptia.org.

Additional Notes:

Order of Volatility: Per NIST SP 800-86, the order of volatility for evidence collection is: (1) memory (e.g., running processes, RAM), (2) temporary files, (3) disk contents, (4) backups or archival data. CS0-003 tests this concept in PBQs and multiple-choice questions.

Forensic Tools: Practice using tools like Volatility (for memory analysis) and ps aux/tasklist (for process capture) to prepare for incident response scenarios.

Incident Response Phases: Evidence collection occurs during the identification and containment phases, a key focus of CS0-003 Domain 3.
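The order of volatility from the notes above can be expressed as a ranking that sorts a mixed list of evidence sources into collection order. The four-level ranking mirrors the one given in the notes. Unknown sources raise a `KeyError` on purpose, so that the analyst has to place them explicitly.

```python
from typing import List

# Rank 1 = most volatile, collected first (mirrors the order of volatility in the notes)
VOLATILITY_RANK = {
    "running processes": 1,
    "temporary files": 2,
    "disk contents": 3,
    "backup data": 4,
}


def collection_order(evidence: List[str]) -> List[str]:
    """Sort evidence sources from most to least volatile; unknown sources raise KeyError."""
    return sorted(evidence, key=lambda item: VOLATILITY_RANK[item.lower()])


print(collection_order(["Backup data", "Disk contents", "Running processes", "Temporary files"]))
# ['Running processes', 'Temporary files', 'Disk contents', 'Backup data']
```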

During a cybersecurity incident, one of the web servers at the perimeter network was affected by ransomware. Which of the following actions should be performed immediately?

A. Shut down the server

B. Reimage the server

C. Quarantine the server

D. Update the OS to latest version

Explanation:

When a web server at the perimeter network is compromised by ransomware, the priority is to contain the threat to prevent further spread and preserve evidence for investigation. CompTIA’s CySA+ CS0-003 exam emphasizes containment as the first critical step in incident response. Immediate actions must be deliberate and strategic, not reactive or destructive.

Correct Answer:

Quarantine the server:

Quarantining the server is the most appropriate immediate action. This involves isolating the affected system from the network to prevent the ransomware from propagating to other systems or communicating with external command-and-control servers. Quarantine preserves the system’s current state, which is essential for forensic analysis and understanding the attack vector. According to CompTIA’s incident response methodology, containment is the first step after detection, and quarantine is a safe way to achieve it without destroying valuable evidence. It also allows analysts to assess the scope of the compromise before initiating recovery procedures.
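On a Linux web server, one way to quarantine at the host level is a default-deny firewall that leaves a single path open for the forensic workstation, so live response can continue without powering the system off. The sketch below only builds `iptables` command strings; it does not execute them, and the analyst IP is hypothetical. Host-based rules can be undone by an attacker with root access, so network-level isolation (switch port, VLAN, or EDR network containment) is often preferred or used alongside this approach.

```python
import ipaddress
from typing import List


def isolation_rules(forensic_host: str) -> List[str]:
    """Build iptables commands that cut a host off except for one forensic workstation."""
    ip = str(ipaddress.ip_address(forensic_host))  # raises ValueError on malformed input
    return [
        "iptables -F",                            # drop existing (possibly permissive) filter rules
        "iptables -A INPUT -i lo -j ACCEPT",
        "iptables -A OUTPUT -o lo -j ACCEPT",
        f"iptables -A INPUT -s {ip} -j ACCEPT",   # analyst access for live response
        f"iptables -A OUTPUT -d {ip} -j ACCEPT",
        "iptables -P INPUT DROP",                 # default-deny everything else,
        "iptables -P OUTPUT DROP",                # including C2 traffic and lateral movement
        "iptables -P FORWARD DROP",
    ]


for cmd in isolation_rules("10.0.9.9"):  # hypothetical forensic workstation
    print(cmd)
```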

Incorrect Answers:

Shut down the server:

While shutting down the server might seem like a quick way to stop the ransomware, it can lead to loss of volatile data such as running processes, memory contents, and active network connections. These are crucial for forensic investigation. CompTIA advises against abrupt shutdowns unless absolutely necessary, as they hinder the ability to analyze the incident thoroughly and may interfere with evidence preservation.

Reimage the server:

Reimaging the server is a recovery action, not an immediate containment step. It wipes the system clean and reinstalls the operating system, which eliminates the ransomware but also destroys all forensic evidence. CompTIA recommends reimaging only after containment and investigation are complete. Jumping to this step prematurely compromises the ability to understand how the attack occurred and whether other systems are affected.

Update the OS to latest version:

Updating the operating system is a preventive measure, not a response to an active incident. It does not remove ransomware or stop its execution. In fact, applying updates during an active compromise could cause instability or interfere with forensic tools. CompTIA includes patching as part of post-incident recovery and hardening—not as an immediate response to ransomware.

Reference:

CompTIA CySA+ CS0-003 Official Study Guide

CompTIA CySA+ CS0-003 Exam Objectives – Domain 3.3: Apply incident response procedures

CompTIA CertMaster Learn for CySA+ CS0-003

An analyst is reviewing a dashboard from the company's SIEM and finds that an IP address known to be malicious can be tracked to numerous high-priority events in the last two hours. The dashboard indicates that these events relate to TTPs. Which of the following is the analyst most likely using?

A. MITRE ATT&CK

B. OSSTMM

C. Diamond Model of Intrusion Analysis

D. OWASP

Correct Answer:

A. MITRE ATT&CK

Explanation:

The analyst is most likely using the MITRE ATT&CK framework, which is specifically designed to map observed adversary behaviors to a structured knowledge base of known Tactics, Techniques, and Procedures (TTPs). In this scenario, the SIEM dashboard highlights connections between a known malicious IP and high-priority events, correlating them with TTPs—a core feature of MITRE ATT&CK. This framework allows defenders to understand the adversary's behavior across the attack lifecycle, such as initial access, execution, privilege escalation, lateral movement, and more. Modern SIEM tools often integrate MITRE ATT&CK to provide real-time mapping of alerts to known adversary methods, making it easier for analysts to triage and respond efficiently. The emphasis on TTPs is a direct indicator of MITRE ATT&CK usage, as few other frameworks provide such detailed behavioral categorization. Additionally, many threat intelligence platforms and XDR/SIEM solutions display ATT&CK mappings within dashboards to enhance situational awareness during incidents.
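The kind of roll-up such a dashboard shows can be sketched by grouping a suspicious IP's events by ATT&CK tactic and technique. The four technique IDs and their tactics are real ATT&CK Enterprise entries, but the event field names (`src_ip`, `technique_id`) and the events themselves are hypothetical. Some ATT&CK techniques map to several tactics; the four used here each map to one.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Small excerpt of ATT&CK Enterprise techniques: ID -> (technique name, tactic)
TECHNIQUES: Dict[str, Tuple[str, str]] = {
    "T1110": ("Brute Force", "Credential Access"),
    "T1059": ("Command and Scripting Interpreter", "Execution"),
    "T1021": ("Remote Services", "Lateral Movement"),
    "T1071": ("Application Layer Protocol", "Command and Control"),
}


def summarize_ttps(events: List[Dict], malicious_ip: str) -> Counter:
    """Count a suspicious IP's SIEM events by (tactic, technique ID, technique name)."""
    counts: Counter = Counter()
    for event in events:
        if event.get("src_ip") != malicious_ip:
            continue
        technique = TECHNIQUES.get(event.get("technique_id", ""))
        if technique:
            name, tactic = technique
            counts[(tactic, event["technique_id"], name)] += 1
    return counts


# Hypothetical SIEM events already tagged with technique IDs by detection rules
events = [
    {"src_ip": "198.51.100.23", "technique_id": "T1110"},
    {"src_ip": "198.51.100.23", "technique_id": "T1110"},
    {"src_ip": "198.51.100.23", "technique_id": "T1021"},
    {"src_ip": "10.0.0.8", "technique_id": "T1059"},
]
for (tactic, tid, name), n in summarize_ttps(events, "198.51.100.23").most_common():
    print(f"{tactic:<18} {tid} {name}: {n}")
```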

Incorrect Answers:

B. OSSTMM (Open Source Security Testing Methodology Manual)

OSSTMM is a security testing and assessment methodology, not a threat behavior framework. It focuses on defining how security testing should be conducted across various domains such as wireless, telecommunications, physical access, and human interaction. It does not categorize attacker behaviors or TTPs and is not commonly integrated into SIEM dashboards. OSSTMM is used more for audits and structured security assessments rather than active incident detection and response. Therefore, an analyst dealing with TTPs in real time during an incident would not be using OSSTMM, making it the wrong fit for this scenario.

C. Diamond Model of Intrusion Analysis:

The Diamond Model is a conceptual framework used for understanding cyber intrusions by correlating four core features: adversary, infrastructure, capability, and victim. While it helps analysts understand relationships between these components, it does not use TTPs in the same structured way as MITRE ATT&CK. It’s primarily used for deeper analysis and attribution after an attack has occurred, not for real-time alert mapping or SIEM dashboard visualization. Although valuable in threat hunting and campaign analysis, it’s not the tool showing high-priority event correlations with TTPs as described in this question.

D. OWASP (Open Web Application Security Project):

OWASP is focused on web application security and maintains lists like the OWASP Top 10, which identifies the most common vulnerabilities in web applications (e.g., SQL injection, XSS, etc.). While useful for developers and application security testing, OWASP does not deal with threat actor TTPs or real-time adversarial behavior. It is not integrated into SIEM dashboards for mapping alerts to TTPs or adversary actions. Therefore, although valuable in a different context, OWASP is not applicable to the described scenario involving malicious IPs and behavioral mapping.

References:

CompTIA CySA+ CS0-003 Objectives

Domain 1.5: Summarize the use of frameworks, policies, and procedures related to cybersecurity operations

Domain 2.2: Analyze potential indicators associated with network attacks
