An organization needs to bring in data collection and aggregation from various endpoints. Which of the following is the best tool to deploy to help analysts gather this data?

A. DLP

B. NAC

C. EDR

D. NIDS

Explanation:

The question asks for the best tool to deploy for data collection and aggregation from various endpoints to assist analysts, likely in a cybersecurity context for monitoring and analyzing security events. Among the options, EDR (Endpoint Detection and Response) is the most suitable, as it is specifically designed to collect, aggregate, and analyze data from endpoints (e.g., laptops, servers, mobile devices) to detect and respond to threats. This aligns with the CS0-003 exam’s Security Operations (Domain 1) and Incident Response and Management (Domain 3) objectives, which emphasize endpoint monitoring and data analysis for security operations.

Why C is Correct:

EDR Functionality: EDR solutions (e.g., CrowdStrike, SentinelOne, Microsoft Defender for Endpoint) deploy agents on endpoints to collect detailed telemetry, such as process execution, network connections, file changes, and user activity. This data is aggregated centrally for analysis, enabling analysts to detect threats, investigate incidents, and perform threat hunting.

Endpoint Focus: EDR is designed to gather data from various endpoints (Windows, macOS, Linux, mobile), making it ideal for comprehensive data collection across diverse systems.

Analyst Support: EDR platforms provide dashboards, alerts, and query tools to help analysts correlate events, identify patterns (e.g., malware, unauthorized access), and respond to incidents, directly supporting the question’s goal.

CS0-003 Alignment: The exam emphasizes endpoint security and incident response (Domains 1 and 3). EDR’s ability to collect and aggregate endpoint data supports these objectives, making it a key tool for SOC analysts.

Why Other Options Are Wrong:

A. DLP (Data Loss Prevention)

Reason: DLP tools focus on preventing unauthorized data exfiltration by monitoring and controlling data transfers (e.g., blocking sensitive data in emails or USB transfers). While DLP collects some endpoint data (e.g., file access logs), its primary role is enforcing data protection policies, not comprehensive data collection and aggregation for threat analysis. It’s less suited for general endpoint telemetry compared to EDR.

B. NAC (Network Access Control)

Reason: NAC solutions manage network access by enforcing policies (e.g., ensuring devices meet security requirements before connecting). They collect limited data (e.g., device compliance status, authentication logs) but focus on access control, not broad endpoint data collection or aggregation for analysts. NAC lacks the detailed telemetry and threat detection capabilities of EDR.

D. NIDS (Network Intrusion Detection System)

Reason: NIDS monitors network traffic for suspicious activity (e.g., malware signatures, anomalies) but operates at the network level, not on endpoints. It collects packet-level data, not endpoint-specific telemetry like processes or file changes. NIDS is less effective for aggregating endpoint data and doesn’t provide the granular visibility analysts need for endpoint-focused investigations.

Additional Context:

EDR Capabilities: EDR collects data like event logs, process trees, and network connections, aggregating them in a central console for analysis. It supports real-time monitoring, forensic investigations, and automated responses, making it ideal for SOC analysts.

Use Case: For example, an EDR tool could collect logs showing a suspicious process on 192.168.1.10, aggregate them with similar events across endpoints, and alert analysts to a potential ransomware attack.

CS0-003 Relevance: The exam tests tools for security operations and incident response, with EDR being a cornerstone for endpoint visibility and threat detection.
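The use case above boils down to grouping endpoint events and flagging behavior that shows up on several hosts at once. The sketch below illustrates that aggregation step. The event schema, the host list, and the WATCHLIST set are hypothetical. Real EDR platforms define their own telemetry formats and query languages. The shadow-copy deletion command is used only as a familiar example of ransomware-like behavior.

```python
from collections import defaultdict

# Hypothetical, simplified telemetry records; real EDR products use their own schemas.
events = [
    {"host": "192.168.1.10", "process": "vssadmin.exe", "cmdline": "vssadmin delete shadows /all"},
    {"host": "192.168.1.22", "process": "vssadmin.exe", "cmdline": "vssadmin delete shadows /all"},
    {"host": "192.168.1.10", "process": "chrome.exe", "cmdline": "chrome.exe"},
    {"host": "192.168.1.31", "process": "vssadmin.exe", "cmdline": "vssadmin delete shadows /all"},
]

# Hypothetical watchlist of process names an analyst wants correlated across endpoints.
WATCHLIST = {"vssadmin.exe"}


def aggregate_by_process(events):
    """Group events by process name and collect the set of hosts reporting each one."""
    hosts_by_process = defaultdict(set)
    for event in events:
        hosts_by_process[event["process"]].add(event["host"])
    return hosts_by_process


def correlate(events, watchlist, min_hosts=2):
    """Return watchlisted processes seen on at least min_hosts distinct endpoints."""
    grouped = aggregate_by_process(events)
    return {
        proc: sorted(hosts)
        for proc, hosts in grouped.items()
        if proc in watchlist and len(hosts) >= min_hosts
    }


if __name__ == "__main__":
    for proc, hosts in correlate(events, WATCHLIST).items():
        print(f"ALERT: {proc} seen on {len(hosts)} endpoints: {', '.join(hosts)}")
```

The min_hosts threshold is what turns isolated events into a cross-endpoint pattern. A single host running the command might be routine administration, but the same command on several hosts at once is worth an analyst's attention.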

Reference:

CompTIA CySA+ (CS0-003) Exam Objectives, Domains 1 (Security Operations) and 3 (Incident Response and Management), www.comptia.org, covering endpoint monitoring and data collection tools.

CompTIA CySA+ Study Guide: Exam CS0-003 by Chapple and Seidl, discussing EDR as a key tool for endpoint data aggregation and analysis.

A systems administrator receives reports of an internet-accessible Linux server that is running very sluggishly. The administrator examines the server, sees a high amount of memory utilization, and suspects a DoS attack related to half-open TCP sessions consuming memory. Which of the following tools would best help to prove whether this server was experiencing this behavior?

A. Nmap

B. TCPDump

C. SIEM

D. EDR

Explanation:

The question asks for the best tool to prove whether an internet-accessible Linux server, running sluggishly with high memory utilization, is experiencing a Denial of Service (DoS) attack related to half-open TCP sessions consuming memory. Half-open TCP sessions, often caused by a SYN flood attack, occur when an attacker sends numerous SYN packets without completing the TCP handshake, exhausting server resources. TCPDump is the most effective tool for capturing and analyzing network traffic to confirm the presence of half-open TCP sessions, aligning with the CS0-003 exam’s Incident Response and Management (Domain 3) and Security Operations (Domain 1) objectives, which emphasize network traffic analysis and diagnosing attack behavior.

Why B is Correct:

TCPDump Functionality: TCPDump is a command-line packet capture tool for Linux that captures and analyzes network traffic in real time. It can display TCP packet details, including flags (e.g., SYN, ACK, RST), allowing the administrator to identify a high volume of SYN packets without corresponding ACKs, indicative of a SYN flood (half-open sessions).

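Relevance to Half-Open Sessions: A SYN flood creates many half-open TCP connections. In TCPDump these appear as repeated SYN packets from various source IPs to the server's open ports (e.g., 80, 443). The server answers with SYN-ACKs, but the final ACK that would complete the handshake never arrives. For example, running tcpdump -i eth0 'tcp[13] & 2 != 0' matches every packet with the SYN flag set. Byte 13 of the TCP header holds the flags, and the value 2 is the SYN bit. Note that this filter catches the server's SYN-ACK replies as well as the incoming SYNs.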

Memory Utilization Link: Half-open sessions consume memory in the server’s TCP stack (e.g., connection queue), causing sluggish performance. TCPDump can confirm this by showing excessive SYN packets, correlating with the observed symptoms.

CS0-003 Alignment: The exam tests skills in analyzing network traffic to diagnose attacks (Domain 3) and using tools like TCPDump for security operations (Domain 1).
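The tcp[13] & 2 test described above is plain bit masking on one byte of the TCP header. The sketch below reproduces that logic on hand-built headers so it is easy to see why a SYN-only check must also require the ACK bit to be clear. It does not capture traffic. The two sample headers are made up, and the flag masks follow the standard TCP header layout.

```python
import struct

# Bit masks for the flags byte (byte 13, zero-based) of a TCP header.
FIN, SYN, RST, PSH, ACK, URG = 0x01, 0x02, 0x04, 0x08, 0x10, 0x20


def tcp_flags_from_header(header: bytes) -> int:
    """Return byte 13 of a raw TCP header: the same byte tcpdump reads as tcp[13]."""
    if len(header) < 20:
        raise ValueError("a TCP header is at least 20 bytes long")
    return header[13]


def has_syn(flags: int) -> bool:
    """Mirror of 'tcp[13] & 2 != 0': true for SYN and also for SYN-ACK."""
    return (flags & SYN) != 0


def is_syn_only(flags: int) -> bool:
    """SYN set and ACK clear: an opening SYN, not the server's SYN-ACK reply."""
    return (flags & SYN) != 0 and (flags & ACK) == 0


# Hand-built 20-byte headers: src/dst ports, seq, ack, data offset (5 words = 0x50),
# flags, window, checksum, urgent pointer. Values are illustrative only.
syn_header = struct.pack("!HHIIBBHHH", 40000, 443, 1000, 0, 0x50, SYN, 64240, 0, 0)
synack_header = struct.pack("!HHIIBBHHH", 443, 40000, 5000, 1001, 0x50, SYN | ACK, 65160, 0, 0)

if __name__ == "__main__":
    for name, hdr in (("client SYN", syn_header), ("server SYN-ACK", synack_header)):
        flags = tcp_flags_from_header(hdr)
        print(f"{name}: flags=0x{flags:02x} has_syn={has_syn(flags)} syn_only={is_syn_only(flags)}")
```

is_syn_only expresses the same condition as tcpdump's named-flag filter that requires tcp-syn set and tcp-ack clear.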

Why Other Options Are Wrong:

A. Nmap

Reason: Nmap is a network scanning tool used to discover hosts, open ports, and services, not to analyze real-time traffic or diagnose ongoing attacks. While Nmap can perform a SYN scan (nmap -sS), it’s an active scanning tool, not a passive monitoring tool like TCPDump. It cannot capture or confirm half-open TCP sessions on the server, making it unsuitable for proving the DoS attack.

C. SIEM (Security Information and Event Management)

Reason: SIEM systems aggregate and analyze logs from multiple sources (e.g., servers, firewalls) to detect incidents but rely on log data, not raw packet captures. While a SIEM might show alerts for unusual traffic or server performance issues, it lacks the granularity to directly analyze TCP packet flags (e.g., SYN without ACK) to confirm half-open sessions. TCPDump provides more precise, real-time packet-level insight.

D. EDR (Endpoint Detection and Response)

Reason: EDR tools monitor endpoint activities (e.g., processes, file changes) and collect telemetry for threat detection but are not designed for detailed network traffic analysis. While EDR might detect secondary effects of a DoS (e.g., high resource usage), it cannot capture or analyze TCP packets to confirm half-open sessions, unlike TCPDump, which directly inspects network traffic.

Additional Context:

SYN Flood Mechanics: In a SYN flood, attackers send SYN packets to open ports, filling the server’s TCP connection queue (controlled by /proc/sys/net/ipv4/tcp_max_syn_backlog in Linux). This consumes memory, causing sluggishness, as observed.

TCPDump Usage Example: The administrator could run tcpdump -i eth0 -nn 'tcp[tcpflags] & (tcp-syn) != 0 and tcp[tcpflags] & (tcp-ack) = 0' to capture SYN-only packets, revealing a flood of half-open connections from multiple source IPs.

Mitigation: If confirmed, the administrator could adjust TCP settings (e.g., enable SYN cookies with sysctl -w net.ipv4.tcp_syncookies=1) or use a firewall to rate-limit SYN packets.

CS0-003 Relevance: The exam includes performance-based questions (PBQs) testing network traffic analysis with tools like TCPDump to diagnose attacks like DoS.
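As a complement to the packet capture, the SYN flood mechanics described above can also be corroborated from the server's own socket table. On Linux, half-open connections waiting in the SYN backlog appear in /proc/net/tcp with the hex state code 03 (SYN_RECV). The ss utility's syn-recv state filter gives roughly the same view. The sketch below parses that table format and counts SYN_RECV entries per local port. This is a swapped-in technique, not part of the TCPDump answer, and the sample text is made up. Note that /proc/net/tcp covers IPv4 only; IPv6 sockets are listed in /proc/net/tcp6. Also, when SYN cookies are active, the kernel may not keep state for every incoming SYN, so the count can understate an attack.

```python
from collections import Counter

TCP_SYN_RECV = "03"  # hex state code the Linux kernel prints for SYN_RECV


def count_syn_recv(proc_net_tcp_text: str) -> Counter:
    """Count SYN_RECV (half-open) sockets per local port in /proc/net/tcp-formatted text."""
    counts = Counter()
    for line in proc_net_tcp_text.splitlines()[1:]:  # first line is the column header
        fields = line.split()
        if len(fields) < 4:
            continue
        local_address, state = fields[1], fields[3]
        if state == TCP_SYN_RECV:
            port = int(local_address.rsplit(":", 1)[1], 16)  # port is hex after the colon
            counts[port] += 1
    return counts


# Trimmed, made-up sample in the /proc/net/tcp layout (IPv4 only).
SAMPLE = """\
  sl  local_address rem_address   st tx_queue rx_queue tr tm->when retrnsmt   uid  timeout inode
   0: 00000000:01BB 00000000:0000 0A 00000000:00000000 00:00000000 00000000     0        0 1000
   1: 0A01A8C0:01BB 0B0A0A0A:9C40 03 00000000:00000000 01:00000064 00000000     0        0 0
   2: 0A01A8C0:01BB 0C0A0A0A:9C41 03 00000000:00000000 01:00000064 00000000     0        0 0
   3: 0A01A8C0:0016 0D0A0A0A:D431 01 00000000:00000000 00:00000000 00000000     0        0 2000
"""

if __name__ == "__main__":
    try:
        with open("/proc/net/tcp") as f:
            text = f.read()
    except OSError:
        text = SAMPLE  # not on Linux (or /proc unavailable): fall back to the sample
    for port, n in count_syn_recv(text).most_common():
        print(f"port {port}: {n} half-open (SYN_RECV) sockets")
```

A large and growing SYN_RECV count on a public port, together with TCPDump showing SYNs that are never followed by a completing ACK, makes a much stronger case than either observation alone.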

Reference:

CompTIA CySA+ (CS0-003) Exam Objectives, Domains 1 (Security Operations) and 3 (Incident Response and Management), www.comptia.org, covering network traffic analysis and DoS attack identification.

CompTIA CySA+ Study Guide: Exam CS0-003 by Chapple and Seidl, discussing TCPDump for analyzing network-based attacks like SYN floods.

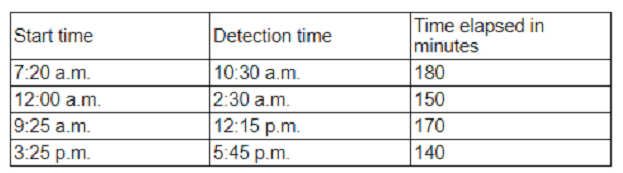

An organization has tracked several incidents that are listed in the following table:

Which of the following is the organization's MTTD?

A. 140

B. 150

C. 160

D. 180

Explanation:

The question asks for the organization’s Mean Time to Detect (MTTD) based on a table of incidents, with options of 140, 150, 160, or 180 (presumably in minutes). MTTD is the average time from when a security incident occurs (e.g., a compromise) to when it is detected by the security team. However, the incident table referenced in the question is not provided, making it impossible to calculate the exact MTTD or select the correct answer. For the CS0-003 exam’s Incident Response and Management (Domain 3) and Security Operations (Domain 1) objectives, I’ll outline how to calculate MTTD and evaluate the options based on typical incident data analysis.

How to Calculate MTTD

Definition: MTTD is the average time taken to detect incidents across a set of events. It’s calculated as:

Sum the detection times for all incidents (time from compromise to detection).

Divide by the number of incidents.

Formula: MTTD = Σ(Detection Time - Compromise Time) / Number of Incidents.

Required Data: The incident table would typically include:

Compromise Time: When each incident occurred (e.g., unauthorized login, malware execution).

Detection Time: When the security team was alerted (e.g., via SIEM, IDS, or user report).

Incident Count: The number of incidents listed.

Example:

Incident 1: Compromise at 10:00 AM, detected at 10:30 AM (30 minutes).

Incident 2: Compromise at 11:00 AM, detected at 12:20 PM (80 minutes).

Incident 3: Compromise at 1:00 PM, detected at 4:00 PM (180 minutes).

MTTD = (30 + 80 + 180) / 3 = 290 / 3 ≈ 96.67 minutes.
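The same worked example can be checked with a few lines of Python. The sketch takes (compromise, detection) timestamp pairs, converts each gap to minutes, and averages them. The calendar date is an arbitrary assumption, since the example gives only times of day.

```python
from datetime import datetime

FMT = "%Y-%m-%d %H:%M"

# (compromise time, detection time) for the three example incidents; the date is arbitrary.
incidents = [
    ("2024-01-01 10:00", "2024-01-01 10:30"),
    ("2024-01-01 11:00", "2024-01-01 12:20"),
    ("2024-01-01 13:00", "2024-01-01 16:00"),
]


def mttd_minutes(pairs, fmt=FMT):
    """Mean of (detection - compromise) across incidents, in minutes."""
    if not pairs:
        raise ValueError("need at least one incident")
    durations = [
        (datetime.strptime(detected, fmt) - datetime.strptime(compromised, fmt)).total_seconds() / 60
        for compromised, detected in pairs
    ]
    return sum(durations) / len(durations)


print(round(mttd_minutes(incidents), 2))  # 96.67
```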

CS0-003 Context: The exam tests incident response metrics like MTTD, often through performance-based questions (PBQs) requiring analysis of logs or incident timelines to calculate averages.

Why No Option Can Be Selected:

Missing Table: The question references a table of incidents, but without specific data (e.g., compromise and detection timestamps for each incident), the MTTD cannot be computed.

Options (140, 150, 160, 180): These are likely in minutes, but without the table, we can’t determine which matches the calculated MTTD.

For example:

If the table listed 3 incidents with detection times of 120, 150, and 150 minutes, MTTD = (120 + 150 + 150) / 3 = 140 minutes (option A).

Without data, all options (140, 150, 160, 180) are equally plausible or implausible.

Evaluating Each Option:

A. 140

Reason: Could be correct if the table shows incidents with an average detection time of 140 minutes, but no data confirms this.

B. 150

Reason: Possible if the average detection time across incidents is 150 minutes, but the lack of table data prevents verification.

C. 160

Reason: Would require the table to show an average detection time of 160 minutes, but no evidence supports this.

D. 180

Reason: Could be correct if the incidents average 180 minutes to detect, but without the table, this cannot be confirmed.

Incident Analysis Process:

Steps:

Extract Timestamps: From the table, note the compromise time and detection time for each incident.

Calculate Differences: Subtract compromise time from detection time for each incident.

Compute Average: Sum the differences and divide by the number of incidents.

Compare to Options: Match the calculated MTTD to the closest option (140, 150, 160, or 180).
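The last two steps above, averaging and matching to an option, can be sketched as follows. The detection_minutes values are the hypothetical 120/150/150 illustration from earlier in this explanation, not data from the missing table. Ties between equally close options would resolve to the first letter listed.

```python
# Answer choices from the question, in minutes.
OPTIONS = {"A": 140, "B": 150, "C": 160, "D": 180}


def mean(values):
    """Arithmetic mean; raises on an empty list instead of dividing by zero."""
    if not values:
        raise ValueError("need at least one detection time")
    return sum(values) / len(values)


def closest_option(mttd, options=OPTIONS):
    """Return the option letter whose value is nearest the computed MTTD."""
    return min(options, key=lambda letter: abs(options[letter] - mttd))


# Hypothetical per-incident detection times (minutes), not the real table.
detection_minutes = [120, 150, 150]
mttd = mean(detection_minutes)
print(mttd, closest_option(mttd))  # 140.0 A
```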

Reference:

CompTIA CySA+ (CS0-003) Exam Objectives, Domains 1 (Security Operations) and 3 (Incident Response and Management), www.comptia.org, covering incident response metrics like MTTD.

CompTIA CySA+ Study Guide: Exam CS0-003 by Chapple and Seidl, discussing how to calculate MTTD from incident data.

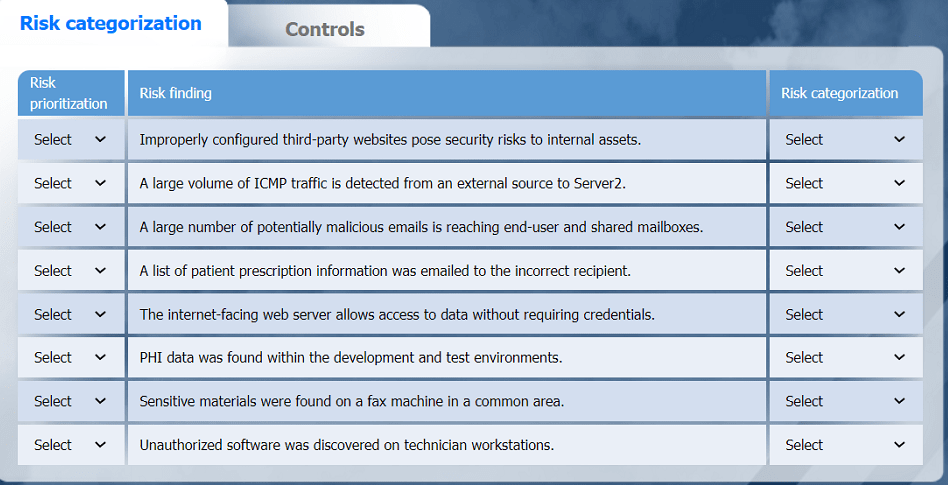

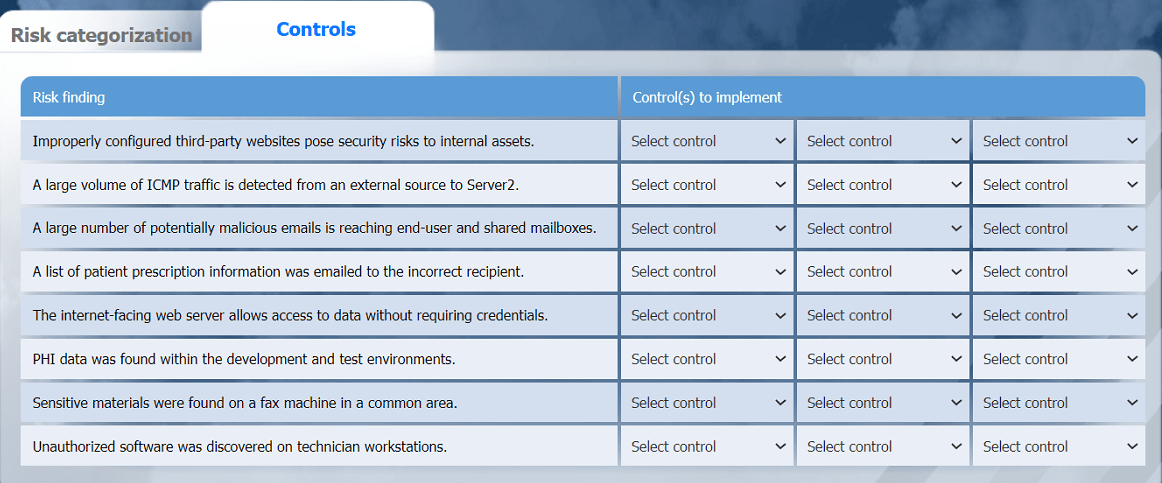

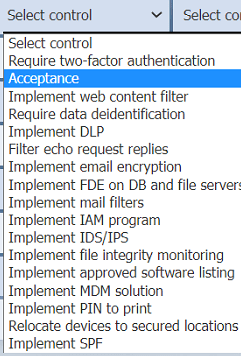

A healthcare organization must develop an action plan based on the findings from a risk assessment. The action plan must consist of:

· Risk categorization

· Risk prioritization

· Implementation of controls

INSTRUCTIONS

Click on the audit report, risk matrix, and SLA expectations documents to review their contents.

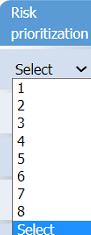

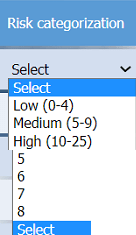

On the Risk categorization tab, determine the order in which the findings must be prioritized for remediation according to the risk rating score. Then, assign a categorization to each risk.

On the Controls tab, select the appropriate control(s) to implement for each risk finding. Findings may have more than one control implemented. Some controls may be used more than once or not at all.

If at any time you would like to bring back the initial state of the simulation, please click the Reset All button.

Without access to the specific documents (Audit Report, Risk Matrix, SLA Expectations) or tabs (Risk Categorization, Controls), a definitive answer cannot be provided. However, I’ll outline the process a security analyst would follow to develop an action plan for a healthcare organization based on a risk assessment, addressing Risk Categorization, Risk Prioritization, and Implementation of Controls. This aligns with the CS0-003 exam’s Vulnerability Management (Domain 2), Security Operations (Domain 1), and Reporting and Communication (Domain 4) objectives, which emphasize risk assessment and mitigation strategies.

General Approach to Developing the Action Plan:

The task involves reviewing risk assessment findings, prioritizing them based on risk rating scores, categorizing risks, and selecting appropriate controls, tailored to a healthcare organization’s context (e.g., HIPAA compliance). Since the documents and tabs are not provided, I’ll describe the standard process and provide a hypothetical example to illustrate how to address the requirements.

Step 1: Risk Categorization

Objective: Assign each finding a risk category based on its nature and impact, typically aligned with frameworks like NIST SP 800-53 or HIPAA requirements.

Process:

Review Findings: Examine the Audit Report for vulnerabilities or risks (e.g., unpatched systems, weak authentication, data exposure).

Assign Categories: Common categories in healthcare include:

Confidentiality: Risks to patient data privacy (e.g., unprotected PII/PHI).

Integrity: Risks to data accuracy (e.g., unauthorized modifications).

Availability: Risks to system uptime (e.g., DoS vulnerabilities).

Compliance: Risks violating regulations (e.g., HIPAA non-compliance).

Example:

Finding: “Outdated EHR software” → Category: Compliance/Availability.

Finding: “Weak MFA on VPN” → Category: Confidentiality/Integrity.

CS0-003 Alignment: Domain 2 emphasizes categorizing risks based on assessment findings to align with organizational priorities and compliance needs.

Step 2: Risk Prioritization

Objective: Order findings for remediation based on risk rating scores, typically derived from the Risk Matrix and SLA Expectations.

Process:

Risk Rating Scores: Use the Risk Matrix to assign scores (e.g., CVSS scores, qualitative ratings like High/Medium/Low, or numerical scales like 1–100) based on:

Likelihood: Probability of exploitation (e.g., public exploit available).

Impact: Severity of consequences (e.g., PHI exposure, system downtime).

SLA Expectations: Prioritize based on remediation timelines (e.g., Critical risks within 7 days, High within 14 days, Medium within 30 days).

Order Findings: Sort from highest to lowest risk score, prioritizing Critical/High risks for remediation within SLA timelines.

Example:

Finding 1: “Unencrypted PHI” (CVSS 9.8, Critical) → Priority 1.

Finding 2: “Outdated EHR software” (CVSS 7.5, High) → Priority 2.

Finding 3: “Weak MFA on VPN” (CVSS 5.0, Medium) → Priority 3.

Finding 4: “Unnecessary open port” (CVSS 3.0, Low) → Priority 4.

CS0-003 Alignment: Domain 2 tests prioritizing vulnerabilities based on risk scores and organizational policies, often in performance-based questions (PBQs).
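The prioritization step can be sketched as a sort by score followed by a lookup of the rating band and SLA window. This sketch assumes CVSS v3.x scores and the standard CVSS v3.x qualitative bands (Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0). The SLA windows are the example values given above. No Low window was given, so it is left as None. A real PBQ would use the organization's own Risk Matrix instead.

```python
from dataclasses import dataclass


def cvss_rating(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity band."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


# Example SLA windows (days) from the text; no Low window is specified, so it is None.
SLA_DAYS = {"Critical": 7, "High": 14, "Medium": 30, "Low": None}


@dataclass
class Finding:
    name: str
    cvss: float


def prioritize(findings):
    """Return (priority, name, score, rating, SLA days) rows, highest score first."""
    ordered = sorted(findings, key=lambda f: f.cvss, reverse=True)
    return [
        (priority, f.name, f.cvss, cvss_rating(f.cvss), SLA_DAYS.get(cvss_rating(f.cvss)))
        for priority, f in enumerate(ordered, start=1)
    ]


# The example findings, deliberately listed out of order.
findings = [
    Finding("Weak MFA on VPN", 5.0),
    Finding("Unencrypted PHI", 9.8),
    Finding("Unnecessary open port", 3.0),
    Finding("Outdated EHR software", 7.5),
]

if __name__ == "__main__":
    for row in prioritize(findings):
        print(row)
```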

Step 3: Implementation of Controls

Objective: Select appropriate controls for each risk finding, with some findings requiring multiple controls and some controls reused across findings.

Process:

Review Findings: Match controls to the nature of each risk, considering healthcare requirements (e.g., HIPAA, NIST).

Available Controls: Common controls include:

Technical: Encryption, patching, MFA, firewalls, IDS/IPS, WAF.

Administrative: Policies, training, access reviews.

Physical: Secure server rooms, badge access.

Select Controls: Choose based on effectiveness, feasibility, and compliance. Multiple controls may apply to a single finding; some controls may be reused.

Example:

Finding 1: Unencrypted PHI

Controls: Enable AES-256 encryption for data at rest, deploy DLP to monitor data exfiltration.

Finding 2: Outdated EHR software

Controls: Apply vendor patches, implement WAF to mitigate exploits.

Finding 3: Weak MFA on VPN

Controls: Enforce strong MFA (e.g., token-based), update access policies.

Finding 4: Unnecessary open port

Controls: Configure firewall to close port, conduct regular port scans.

CS0-003 Alignment: Domains 1 and 2 emphasize selecting and implementing controls to mitigate risks, ensuring compliance and security.

Hypothetical Example:

Assume the Audit Report lists:

Finding 1: Unencrypted PHI on database server (CVSS 9.8, Critical).

Finding 2: Outdated web server software (CVSS 7.5, High).

Finding 3: Weak password policies (CVSS 5.5, Medium).

Finding 4: Open port 23 (Telnet) (CVSS 4.0, Medium).

Risk Categorization:

Finding 1: Confidentiality/Compliance (exposes PHI, violates HIPAA).

Finding 2: Availability/Compliance (exploitable software risks downtime).

Finding 3: Confidentiality/Integrity (weak passwords risk unauthorized access).

Finding 4: Confidentiality (Telnet is unencrypted, risks data interception).

Risk Prioritization:

Order: Finding 1 (Critical, CVSS 9.8) → Finding 2 (High, CVSS 7.5) → Finding 3 (Medium, CVSS 5.5) → Finding 4 (Medium, CVSS 4.0).

SLA: Critical (7 days), High (14 days), Medium (30 days).

Controls:

Finding 1: Encryption (AES-256), DLP deployment, access controls.

Finding 2: Patch management, WAF configuration.

Finding 3: Password policy enforcement, MFA implementation.

Finding 4: Firewall rule to close port 23, network monitoring.

Why No Definitive Answer:

Missing Documents: The question references an Audit Report, Risk Matrix, and SLA Expectations, but these are not provided, preventing specific categorization, prioritization, or control selection.

Tabs Unavailable: The Risk Categorization and Controls tabs are not accessible, so I cannot assign specific categories or controls to findings.

Options Implied: The task implies a PBQ where findings are listed with risk scores, and controls are selected from a list, but without data, I can only describe the process.

Reference:

CompTIA CySA+ (CS0-003) Exam Objectives, Domains 1 (Security Operations), 2 (Vulnerability Management), and 4 (Reporting and Communication), www.comptia.org, covering risk assessment, prioritization, and control implementation.

CompTIA CySA+ Study Guide: Exam CS0-003 by Chapple and Seidl, discussing risk management and mitigation in healthcare environments.

A SOC manager receives a phone call from an upset customer. The customer received a vulnerability report two hours ago, but the report did not have a follow-up remediation response from an analyst. Which of the following documents should the SOC manager review to ensure the team is meeting the appropriate contractual obligations for the customer?

A. SLA

B. MOU

C. NDA

D. Limitation of liability

Explanation:

An SLA (Service Level Agreement) is a formal document that defines the agreed-upon level of service between a service provider (like a SOC) and a customer. It outlines:

Response and resolution timeframes for different types of incidents or reports

Expectations around communications and follow-up

Performance metrics, including escalation paths if expectations are not met

In this case, the customer received a vulnerability report but did not get a timely follow-up, which indicates a possible violation of the SLA terms.
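Checking an SLA like this is a deadline comparison. The sketch below assumes a hypothetical one-hour follow-up window, since the question does not state the contractual timeframe. The real value has to come from the customer's SLA.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical contract term: an analyst follow-up is due within 1 hour of the report.
# The real window must come from the customer's SLA.
FOLLOW_UP_WINDOW = timedelta(hours=1)


def follow_up_breached(report_sent: datetime, follow_up_sent: Optional[datetime],
                       now: datetime, window: timedelta = FOLLOW_UP_WINDOW) -> bool:
    """True if the follow-up arrived late, or has not arrived and the deadline has passed."""
    deadline = report_sent + window
    if follow_up_sent is not None:
        return follow_up_sent > deadline
    return now > deadline


# The scenario: report delivered two hours ago, no follow-up yet.
now = datetime(2024, 1, 1, 12, 0)
report_sent = now - timedelta(hours=2)
print(follow_up_breached(report_sent, None, now))  # True
```

With a longer window (say four hours), the same two-hour gap would not yet be a breach. This is why the manager has to read the SLA itself rather than rely on the customer's expectation.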

Why the Other Options Are Incorrect:

MOU (Memorandum of Understanding): A non-binding agreement showing intent to collaborate, not used for enforceable response times.

NDA (Non-Disclosure Agreement): Protects confidentiality of shared information, irrelevant to service delivery timelines.

Limitation of Liability: Specifies financial/legal bounds in case of damage or loss, not service responsibilities.

Reference:

CompTIA CySA+ (CS0-003) Official Exam Objectives:

"Given a scenario, use appropriate cybersecurity data sources to support an investigation — including SLAs for response/resolution times."

When starting an investigation, which of the following must be done first?

A. Notify law enforcement

B. Secure the scene

C. Seize all related evidence

D. Interview the witnesses

Explanation:

The question asks which step must be done first when starting an investigation, likely in the context of a cybersecurity incident or digital forensics investigation, as relevant to the CS0-003 exam’s Incident Response and Management (Domain 3) objective. Securing the scene is the first critical step to ensure the integrity of evidence and prevent further compromise, aligning with standard incident response and forensic procedures.

Why B is Correct:

Securing the Scene: In a cybersecurity investigation, securing the scene involves isolating affected systems (e.g., disconnecting from the network, powering down non-critical systems in a controlled manner) to prevent further damage, tampering, or data loss. This preserves evidence (e.g., logs, memory, files) and maintains the chain of custody.

Incident Response Process: The CS0-003 exam draws on frameworks like NIST SP 800-61. Its incident response life cycle has four phases: Preparation; Detection and Analysis; Containment, Eradication, and Recovery; and Post-Incident Activity. (The six-step Preparation, Identification, Containment, Eradication, Recovery, and Lessons Learned model comes from SANS.) Securing the scene is part of containment, which begins as soon as an incident is identified to limit its impact and preserve evidence.

Why First: Securing the scene is prioritized to:

Prevent the attacker from covering their tracks (e.g., deleting logs).

Stop ongoing damage (e.g., ransomware encryption).

Ensure evidence remains intact for later analysis.

Example: If a server is compromised, the analyst might isolate it by disconnecting it from the network (while keeping it powered on for volatile memory capture) before proceeding with evidence collection or interviews.

Why Other Options Are Wrong:

A. Notify law enforcement

Reason: Notifying law enforcement may be required for serious incidents (e.g., data breaches involving PII), but it’s not the first step. Notification typically occurs after initial containment and evidence preservation to ensure critical information is secured and the incident is understood. Premature notification could delay response efforts or lack sufficient details to be actionable.

C. Seize all related evidence

Reason: Seizing evidence (e.g., capturing logs, memory dumps, or hard drives) is critical but comes after securing the scene. Without first isolating systems, evidence could be altered, deleted, or corrupted by ongoing attacker activity or system processes. Securing the scene ensures evidence integrity before collection.

D. Interview the witnesses

Reason: Interviewing witnesses (e.g., employees who observed suspicious activity) provides valuable context but is not the first step. Witnesses are typically interviewed during detection and analysis to gather details, but securing the scene takes precedence to prevent evidence loss or further compromise.

Additional Context:

CS0-003 Relevance: Domain 3 emphasizes incident response procedures, including preserving evidence and following a structured process. Securing the scene is a foundational step in digital forensics to maintain evidence admissibility (e.g., for legal or regulatory purposes).

Healthcare Context: In a healthcare organization (per previous questions), securing the scene is critical to protect PHI (Protected Health Information) under HIPAA, preventing further data exposure during a breach.

Tools/Techniques: Securing the scene may involve:

Network isolation (e.g., firewall rules, VLAN changes).

Physical isolation (e.g., unplugging network cables, not powering off to preserve RAM).

Documenting the environment (e.g., taking photos, noting system states).
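Once the scene is secured, documentation usually extends to recording cryptographic hashes of collected artifacts. This lets later analysis show that they were not altered, which supports chain of custody. The sketch below builds a simple SHA-256 manifest. The manifest fields and the "analyst1" collector name are hypothetical. Forensic suites and case-management tools have their own formats.

```python
import hashlib
import io
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_stream(stream, chunk_size=1 << 20):
    """Hash a binary file-like object in chunks so large images need not fit in memory."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def sha256_file(path):
    """Open a file read-only in binary mode and return its SHA-256 hex digest."""
    with open(path, "rb") as f:
        return sha256_stream(f)


def build_manifest(paths, collected_by):
    """Record who collected which files, when (UTC), and each file's SHA-256 digest."""
    return {
        "collected_by": collected_by,
        "collected_at_utc": datetime.now(timezone.utc).isoformat(),
        "items": [{"path": str(p), "sha256": sha256_file(p)} for p in paths],
    }


if __name__ == "__main__":
    import tempfile

    # Demo on a throwaway file; in practice the paths would be collected artifacts.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"example evidence")
    try:
        print(json.dumps(build_manifest([tmp.name], collected_by="analyst1"), indent=2))
    finally:
        Path(tmp.name).unlink()
    print(sha256_stream(io.BytesIO(b"abc")))
```

Re-hashing an artifact later and comparing against the manifest shows whether it changed since collection.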

Reference:

CompTIA CySA+ (CS0-003) Exam Objectives, Domain 3 (Incident Response and Management), www.comptia.org, covering incident response processes and evidence preservation.

CompTIA CySA+ Study Guide: Exam CS0-003 by Chapple and Seidl, discussing the importance of securing the scene in digital forensics and incident response.

Which of the following tools would work best to prevent the exposure of PII outside of an organization?

A. PAM

B. IDS

C. PKI

D. DLP

Explanation:

DLP (Data Loss Prevention) is the best tool to prevent exposure of PII (Personally Identifiable Information) outside the organization. It works by:

Monitoring data in use, in motion, and at rest.

Identifying sensitive information, such as names, SSNs, credit card numbers, etc.

Blocking or alerting when attempts are made to send PII externally via email, USB, cloud storage, or other means.

Enforcing policies to protect data from unauthorized transmission or leakage.

This makes it ideal for compliance with privacy laws like GDPR, HIPAA, and others.
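To make "identifying sensitive information" concrete, the sketch below shows two classic content-inspection checks that DLP-style tools can apply: a pattern for U.S. SSN-formatted strings and a Luhn checksum to filter card-number candidates. The patterns are simplified assumptions for illustration. Real DLP engines combine many more detection methods and contextual rules, and they enforce actions at the endpoint, mail gateway, or proxy.

```python
import re

# Toy detectors; production DLP engines use far richer rules and context.
SSN_RE = re.compile(r"\b(?!000|666|9\d\d)\d{3}-(?!00)\d{2}-(?!0000)\d{4}\b")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")  # 13 to 19 digits, optional single space/hyphen separators


def luhn_valid(candidate: str) -> bool:
    """Luhn checksum, used to discard random digit runs that cannot be card numbers."""
    digits = [int(c) for c in candidate if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_pii(text: str):
    """Return (type, match) pairs for SSN-formatted strings and Luhn-valid card numbers."""
    hits = [("SSN", m.group()) for m in SSN_RE.finditer(text)]
    hits += [("CARD", m.group()) for m in CARD_RE.finditer(text) if luhn_valid(m.group())]
    return hits


def should_block(text: str) -> bool:
    """Policy stub: block outbound content if any PII pattern is found."""
    return bool(find_pii(text))


if __name__ == "__main__":
    msg = "Customer SSN 123-45-6789, card 4111 1111 1111 1111."
    print(find_pii(msg), should_block(msg))
```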

Why the Other Options Are Incorrect:

PAM (Privileged Access Management): Controls access for privileged users but doesn’t monitor or prevent data leakage directly.

IDS (Intrusion Detection System): Detects malicious activity but doesn’t prevent data exfiltration of sensitive info like PII.

PKI (Public Key Infrastructure): Manages encryption and certificates, useful for secure communication but not for monitoring or blocking PII transfers.

Reference:

CompTIA CySA+ (CS0-003) Official Exam Objectives, Domain 2.3:

"Explain the purpose of data loss prevention (DLP) and how it applies to data in use, motion, and at rest."

Two employees in the finance department installed a freeware application that contained embedded malware. The network is robustly segmented based on areas of responsibility. These computers had critical sensitive information stored locally that needs to be recovered. The department manager advised all department employees to turn off their computers until the security team could be contacted about the issue. Which of the following is the first step the incident response staff members should take when they arrive?

A. Turn on all systems, scan for infection, and back up data to a USB storage device.

B. Identify and remove the software installed on the impacted systems in the department.

C. Explain that malware cannot truly be removed and then reimage the devices.

D. Log on to the impacted systems with an administrator account that has privileges to perform backups.

E. Segment the entire department from the network and review each computer offline.

Explanation:

The first step in incident response when dealing with a malware infection, especially when sensitive data is involved, is containment. Since the department has already been advised to shut down their systems, the logical and secure next move is to isolate the affected environment (i.e., the department) from the rest of the network to prevent any lateral movement or data exfiltration by the malware.

By segmenting the department from the network and reviewing systems offline, the security team can:

Analyze systems without the malware potentially communicating with external C2 (command and control) servers.

Minimize further contamination to other parts of the network.

Safely recover critical data and assess the damage in a forensically sound manner.

Why the Other Options Are Wrong:

"Turn on all systems, scan for infection, and back up data to a USB..."

Booting infected systems without precautions risks activating the malware and allowing it to spread or destroy data.

"Identify and remove the software..."

Malware can install deeper components or persistence mechanisms. Removal should come after containment and proper analysis.

"Explain that malware cannot truly be removed and reimage..."

This might be a later step, but doing this first could result in loss of forensic evidence and unrecovered data.

"Log on with admin account to back up data..."

This risks further compromise. You should not use privileged accounts on possibly infected systems before containment.

Reference:

NIST SP 800-61 Rev. 2 – Computer Security Incident Handling Guide

Phase: Containment, Eradication, and Recovery

Which of the following techniques would be best to provide the necessary assurance for embedded software that drives centrifugal pumps at a power plant?

A. Containerization

B. Manual code reviews

C. Static and dynamic analysis

D. Formal methods

Explanation:

Formal methods are mathematically based techniques used to specify, develop, and verify software and hardware systems with high assurance and safety requirements—exactly the kind of environment found in critical infrastructure like power plants.

Since embedded software driving centrifugal pumps in a power plant must meet extremely high safety, reliability, and integrity standards, formal methods are the best fit. They allow for:

Mathematical proof of correctness of the software relative to its specification.

Elimination of ambiguities in software behavior.

Assurance that specified unsafe states are unreachable under all conditions captured by the model and specification.
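Showing that unsafe states are unreachable is what explicit-state model checking does, and model checking is one family of formal methods. The toy sketch below models a hypothetical pump controller with three variables: pump on/off, discharge valve open/closed, and a small pressure level. It exhaustively explores every reachable state. The controller rules, the TRIP level, and the unsafe-state definition are invented for illustration. Real projects use dedicated tools (for example TLA+ or SPIN) and far richer models. The guard_pressure flag shows the checker catching a design bug with a concrete counterexample trace.

```python
from collections import deque

TRIP = 2  # hypothetical pressure level at which the controller trips the pump


def successors(state, guard_pressure=True):
    """Yield (action, next_state) for every move the modeled controller/operator can make."""
    pump_on, valve_open, p = state
    yield "open_valve", (pump_on, True, p)
    yield "close_valve", (False, False, p)  # interlock: closing the valve stops the pump
    if valve_open and (p < TRIP or not guard_pressure):
        yield "start_pump", (True, valve_open, p)
    yield "stop_pump", (False, valve_open, p)
    if pump_on:
        new_p = p + 1
        yield "tick", (new_p < TRIP, valve_open, new_p)  # pump trips once pressure reaches TRIP
    else:
        yield "tick", (False, valve_open, max(p - 1, 0))


def unsafe(state):
    """Hypothetical safety property: no running pump against a closed valve, no overpressure."""
    pump_on, valve_open, p = state
    return (pump_on and not valve_open) or p > TRIP


def check(initial=(False, False, 0), guard_pressure=True):
    """Breadth-first search of all reachable states; return a counterexample trace or None."""
    if unsafe(initial):
        return [("init", initial)]
    parents = {initial: None}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        for action, nxt in successors(state, guard_pressure):
            if nxt in parents:
                continue
            parents[nxt] = (state, action)
            if unsafe(nxt):
                return _trace(parents, nxt)
            queue.append(nxt)
    return None


def _trace(parents, state):
    """Walk parent links back to the initial state and return the steps in order."""
    steps = []
    while parents[state] is not None:
        prev, action = parents[state]
        steps.append((action, state))
        state = prev
    steps.append(("init", state))
    return list(reversed(steps))


if __name__ == "__main__":
    print("guarded design:", check() or "no reachable unsafe state")
    print("unguarded design:", check(guard_pressure=False))
```

Unlike testing, the search covers every reachable state of the model, but the guarantee only extends as far as the model and the property are faithful to the real system.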

This level of rigor is typically mandated in systems where failure could result in loss of life, major environmental damage, or system-wide outages—such as in nuclear plants, avionics, or industrial control systems.

Why Other Options Are Not Ideal:

Containerization:

Useful for isolating applications in user space but not applicable to embedded systems on physical hardware with real-time control needs.

Manual code reviews:

Helpful for catching logic and syntax errors but subject to human error and not scalable or provable for safety-critical software.

Static and dynamic analysis:

Good for identifying bugs and runtime issues, but do not provide full assurance or mathematically provable guarantees of correctness.

Reference:

NIST SP 800-160 Vol. 1 – Systems Security Engineering: Considerations for a Multidisciplinary Approach in the Engineering of Trustworthy Secure Systems

IEC 61508 – Functional Safety of Electrical/Electronic/Programmable Electronic Safety-related Systems.

After an incident, a security analyst needs to perform a forensic analysis to report complete information to a company stakeholder. Which of the following is most likely the goal of the forensic analysis in this case?

A. Provide a full picture of the existing risks.

B. Notify law enforcement of the incident.

C. Further contain the incident.

D. Determine root cause information.

Explanation:

After an incident has occurred, a forensic analysis aims to reconstruct the timeline, identify how the attack happened, what systems or data were affected, and who may have been responsible. This process is essential to:

Understand how the incident originated.

Identify technical vulnerabilities or security failures.

Prevent recurrence of the same issue.

This aligns with the root cause analysis process, which is a core goal of digital forensics post-incident. The findings are often shared with stakeholders to support decision-making on remediation, risk management, and possibly legal action.
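Reconstructing the timeline usually starts by merging events from several sources into one chronological view. The earliest related events then point toward the initial access vector. The sketch below merges hypothetical, already-normalized log entries. It assumes all sources use the same time zone, which real investigations must verify and normalize first. The IP address comes from a documentation range, and the account name is invented.

```python
from datetime import datetime

# Hypothetical log entries, already normalized to (ISO-8601 timestamp, source, message).
# Assumes every source logs in the same time zone.
firewall_log = [
    ("2024-03-01T09:58:12", "firewall", "Inbound RDP from 203.0.113.7 allowed to 10.0.0.5"),
]
auth_log = [
    ("2024-03-01T09:58:40", "auth", "Successful logon for svc_backup from 203.0.113.7"),
    ("2024-03-01T10:15:03", "auth", "New local admin account created by svc_backup"),
]
edr_log = [
    ("2024-03-01T10:02:05", "edr", "powershell.exe launched by svc_backup on 10.0.0.5"),
]


def build_timeline(*sources):
    """Merge events from every source into one list ordered by timestamp."""
    merged = [event for source in sources for event in source]
    return sorted(merged, key=lambda event: datetime.fromisoformat(event[0]))


if __name__ == "__main__":
    for ts, source, message in build_timeline(firewall_log, auth_log, edr_log):
        print(f"{ts}  [{source:8}] {message}")
```

In this made-up timeline, the exposed RDP service is the first event in the chain. That is the kind of root-cause finding the stakeholder report would focus on.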

Why the Other Options Are Incorrect:

Provide a full picture of the existing risks:

That’s the goal of a risk assessment, not forensic analysis.

Notify law enforcement of the incident:

This may occur based on the findings, but it is not the purpose of forensic analysis itself.

Further contain the incident:

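Containment is a separate incident response activity (part of NIST's Containment, Eradication, and Recovery phase). It is typically performed before post-incident forensic analysis, so it is not the analysis's goal.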

Reference:

NIST SP 800-86: Guide to Integrating Forensic Techniques into Incident Response

NIST SP 800-61r2: Computer Security Incident Handling Guide
