Topic 1: Main Questions

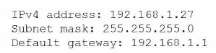

Some users are unable to access their workstations. An administrator runs ipconfig on one of the workstations and sees the following:

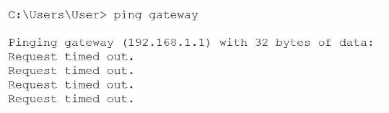

The administrator runs the following command and receives this output:

Which of the following is the source of the issue?

A. Web server

B. Router

C. DNS

D. DHCP

Explanation:

The administrator ran ping gateway and received "Request timed out" responses, indicating that the workstation cannot reach its default gateway at 192.168.1.1. The default gateway is the router that connects the local network to other networks (including the internet). Since the workstation has a valid IP address (192.168.1.27) and subnet mask (255.255.255.0), local network communication should work, but the failure to ping the gateway means the router is either down, misconfigured, or not responding. Without access to the router, users cannot reach resources outside their local subnet, including other workstations on different subnets and the internet.
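
The subnet reasoning above can be checked with a short sketch. It uses Python's standard `ipaddress` module and the addresses quoted in the explanation; the helper name `same_subnet` and the off-subnet address `192.168.2.10` are illustrative assumptions, not part of the original question.

```python
import ipaddress

def same_subnet(host_ip: str, netmask: str, other_ip: str) -> bool:
    """Return True if other_ip lies in the subnet defined by host_ip and netmask."""
    # strict=False lets us pass a host address instead of the network address.
    network = ipaddress.ip_network(f"{host_ip}/{netmask}", strict=False)
    return ipaddress.ip_address(other_ip) in network

# Values quoted in the explanation above.
workstation = "192.168.1.27"
mask = "255.255.255.0"
gateway = "192.168.1.1"

# The gateway is on the same subnet, so a ping should not need any routing.
print(same_subnet(workstation, mask, gateway))          # True

# A hypothetical host on another subnet must be reached through the gateway.
print(same_subnet(workstation, mask, "192.168.2.10"))   # False
```

Because the gateway sits on the workstation's own subnet, a timed-out ping to it points at the router (or the path to it) rather than at addressing.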

Why other options are incorrect:

A. Web server:

The web server is a specific resource beyond the local network. The ping failure to the gateway occurs before any traffic can reach the web server. Even if the web server were down, the gateway would still respond to ping.

C. DNS:

DNS resolves domain names to IP addresses. The administrator pinged "gateway" by IP address (192.168.1.1), so DNS resolution was not required. The timeout indicates a network layer issue, not a name resolution problem.

D. DHCP:

The workstation has a valid IP address (192.168.1.27) in the correct subnet, indicating DHCP successfully assigned an address. DHCP is not involved in ongoing connectivity once an address is obtained.

Reference:

CompTIA A+ Core 1 (220-1201) Objectives 2.6 and 5.3: Troubleshooting network connectivity. The default gateway (router) must be reachable for traffic to leave the local subnet. Ping failures to the gateway isolate the issue to the router or Layer 3 connectivity.

Which of the following printing initiatives would be best to accomplish environmentally friendly objectives?

A. Requiring user authentication for printing

B. Locking down printing to only certain individuals

C. Modifying duplex settings to double-sided

D. Changing the print quality settings to best

Explanation:

The most direct way to achieve "environmentally friendly" goals in a printing environment is to reduce the consumption of physical resources—specifically paper and ink/toner. Duplex printing allows the printer to print on both sides of a single sheet of paper. By setting this as the default (or "modifying" it to be mandatory), a company can theoretically reduce its paper usage by up to 50%. This is a standard green initiative in most corporate environments to lower the "carbon footprint" and reduce waste.
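
The "up to 50%" figure follows from simple arithmetic, sketched below. The function names are hypothetical and the model ignores blank backs, cover sheets, and similar real-world details.

```python
import math

def sheets_needed(pages: int, duplex: bool) -> int:
    """Sheets of paper used to print `pages` printed sides."""
    if pages < 0:
        raise ValueError("pages must be non-negative")
    # Duplex puts two sides on each sheet; an odd last page still needs a sheet.
    return math.ceil(pages / 2) if duplex else pages

def paper_saving(pages: int) -> float:
    """Fraction of sheets saved by duplex versus single-sided printing."""
    if pages == 0:
        return 0.0
    simplex = sheets_needed(pages, duplex=False)
    return (simplex - sheets_needed(pages, duplex=True)) / simplex

for pages in (1, 5, 10):
    print(pages, sheets_needed(pages, duplex=True), f"{paper_saving(pages):.0%}")
```

A one-page job saves nothing, a five-page job saves 40%, and only even page counts reach the full 50%, which is why the saving is an upper bound.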

Why the Other Options are Incorrect:

A. Requiring user authentication:

Also known as "Pull Printing" or "Badge Printing," this requires a user to be physically present at the printer to release the job. While this does reduce waste (by preventing forgotten stacks of paper), its primary purpose is usually security and confidentiality, not specifically environmental friendliness.

B. Locking down printing to individuals:

This is an access control measure. While it might lower overall volume, it is a restrictive management policy rather than an "environmentally friendly" configuration setting.

D. Changing quality settings to best:

This is the opposite of an eco-friendly objective. "Best" quality uses the maximum amount of ink or toner and often slows down the printing process. To be environmentally friendly, you would choose "Draft" or "Toner Saver" mode.

References:

CompTIA A+ Exam Objectives (220-1201): Objective 3.7 – "Given a scenario, install and configure multifunction devices/printers and settings." (Focus: Configuration settings like duplex, orientation, and quality).

A group of friends is gathering in a room to play video games. One of the friends has a game server. Which of the following network types should the group use so they can all connect to the same server and the internet?

A. SAN

B. MAN

C. LAN

D. PAN

Explanation:

A Local Area Network (LAN) is a network that connects devices within a limited geographic area, such as a single room, home, or building. In this scenario, friends gathering in a room to connect to a game server need a LAN. They can connect their gaming devices to a switch or wireless access point, which connects to a router providing internet access. This allows all devices to communicate with each other (including the game server) and share the internet connection.

Why other options are incorrect:

A. SAN (Storage Area Network):

A SAN is a specialized high-speed network that provides block-level storage access to servers. It is used for enterprise storage, not for gaming with friends.

B. MAN (Metropolitan Area Network):

A MAN covers a larger geographic area than a LAN, typically spanning a city or campus. It is too large for a single-room gathering.

D. PAN (Personal Area Network):

A PAN connects devices within an individual's personal space (typically within 10 meters), such as Bluetooth headphones connecting to a phone. It is too small to connect multiple people gaming together.

Reference:

CompTIA A+ Core 1 (220-1201) Objective 2.2: "Compare and contrast common networking hardware... Network types including LAN (Local Area Network) for local device connectivity."

A management team is concerned about enterprise devices that do not have any controls in place. Which of the following should an administrator implement to address this concern?

A. MDM

B. MFA

C. VPN

D. SSL

Explanation:

Mobile device management (MDM) is a type of security software used by an IT department to monitor, manage, and secure employees' mobile devices (laptops, smartphones, tablets) that are deployed across an organization. When management is concerned about a lack of controls, MDM provides the necessary tools to:

Enforce security policies (like requiring a passcode).

Remotely wipe data if a device is lost or stolen.

Control which applications can be installed.

Monitor device health and location.

Why the Other Options are Incorrect:

B. MFA (Multi-Factor Authentication):

This is a security process that requires more than one method of authentication (e.g., a password plus a text code). While it secures user accounts, it does not provide "control" over the device itself, its settings, or its hardware.

C. VPN (Virtual Private Network):

A VPN creates a secure, encrypted tunnel for data to travel over a public network. It protects data in transit, but it does not allow an administrator to manage the device's configuration or enforce company policies.

D. SSL (Secure Sockets Layer):

SSL (now largely replaced by TLS) is a protocol for establishing authenticated and encrypted links between networked computers (like a web browser and a server). It is a communication security tool, not a device management solution.

References:

CompTIA A+ Exam Objectives (220-1201): Objective 1.4 – "Given a scenario, configure basic mobile-device business and professional features." (Specifically MDM, MAM, and corporate email).

A user keeps getting an error message when trying to print a letter. The printer is normally used to print cards and invitations. The user tries printing from a different computer, but the issue persists. Which of the following should the user do to resolve the issue?

A. Print fewer pages.

B. Print on card stock.

C. Replace the rollers.

D. Change the paper tray setting.

Explanation:

The printer is normally used for cards and invitations (which use thicker paper or card stock), but the user is now trying to print a standard letter. The most likely issue is that the paper tray setting does not match the loaded paper. Many printers have adjustable paper tray configurations or driver settings that specify the paper type loaded. If the printer expects card stock (thick paper) but the user loaded regular letter paper without updating the setting, the printer may display an error or refuse to print. Changing the paper tray setting to match the actual paper in use (plain letter paper) will resolve the issue.

Why other options are incorrect:

A. Print fewer pages:

The error is related to paper type mismatch, not the number of pages. Reducing the page count does not address the underlying configuration issue.

B. Print on card stock:

The user needs to print a letter on regular paper, not card stock. Printing on card stock would waste expensive paper and not meet the requirement.

C. Replace the rollers:

Worn rollers cause paper jams or misfeeds, not paper type mismatch errors. The printer functions normally for its usual card stock printing.

Reference:

CompTIA A+ Core 1 (220-1201) Objective 3.6: "Given a scenario, configure printers... Paper tray settings and paper type configuration to match loaded media and prevent errors."

Which of the following is the best to use when testing a file for potential malware?

A. Multitenancy

B. Test development

C. Cross-platform virtualization

D. Sandbox

Explanation:

A Sandbox is an isolated testing environment that allows users to execute suspicious files or programs without risking damage to the host machine or the network. Think of it as a "digital petri dish." When you run a file in a sandbox, the software is tricked into thinking it has full access to a real system, but in reality, all its actions are contained within a restricted area. If the file turns out to be malware, it can be deleted, and the sandbox can be "wiped" clean, leaving the rest of the computer completely untouched.

Why the Other Options are Incorrect:

A. Multitenancy:

This is a cloud computing concept where a single instance of software (or hardware) serves multiple different customers (tenants). While it's efficient for resource sharing in the cloud, it has nothing to do with testing malicious files.

B. Test development:

This is a broad phase in the software development lifecycle (SDLC) where tests are created to ensure code works as intended. It is a process, not a specific tool or environment used for malware analysis.

C. Cross-platform virtualization:

This refers to running an operating system or application built for one platform on a different platform, such as software compiled for x86 running on an ARM-based Mac. While you could run a sandbox inside a virtual machine, the term "cross-platform" describes compatibility, not the security isolation required for malware testing.

References:

CompTIA A+ Exam Objectives (220-1201): Objective 4.2 – "Summarize aspects of client-side virtualization." (Focus: Purpose of virtual machines and sandboxing).

Which of the following types of RAM is typically used in servers?

A. SODIMM

B. Rambus

C. DDR3

D. ECC

Explanation:

ECC (Error-Correcting Code) RAM is the type of memory typically used in servers and workstations where data integrity and system stability are critical. ECC memory can detect and correct common types of internal data corruption, preventing crashes and data corruption in mission-critical applications. While ECC RAM is available in DDR3, DDR4, and DDR5 formats, the defining characteristic for server use is the ECC functionality itself, not the specific DDR generation.
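
As a rough illustration of what "detect and correct" means, the sketch below implements a Hamming(7,4) code, a textbook scheme that corrects any single flipped bit. This is a teaching toy under stated assumptions, not how any particular memory controller works: server ECC modules commonly protect 64-bit words with 8 extra check bits, and the function names `encode` and `decode` are hypothetical.

```python
def encode(data):
    """Encode 4 data bits as a 7-bit codeword [p1, p2, d1, p4, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4   # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4   # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]

def decode(codeword):
    """Correct at most one flipped bit; return (data_bits, error_position or 0)."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s4   # 1-based index of the bad bit, 0 if none
    if position:
        c[position - 1] ^= 1          # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]], position

stored = encode([1, 0, 1, 1])
corrupted = list(stored)
corrupted[4] ^= 1                     # simulate a single bit flip in memory
print(decode(corrupted))              # ([1, 0, 1, 1], 5)
```

The original four data bits are recovered even though one stored bit changed, which is the property that makes ECC memory attractive where data integrity matters.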

Why other options are incorrect:

A. SODIMM:

SODIMM (Small Outline Dual In-line Memory Module) is a physical form factor designed for laptops and small form factor devices due to its smaller size. Servers typically use standard DIMMs, not SODIMMs.

B. Rambus:

Rambus (RDRAM) was a proprietary memory technology used briefly in the early 2000s. It is obsolete and not used in modern servers.

C. DDR3:

DDR3 is a generation of memory technology, not a server-specific feature. Servers may use DDR3, DDR4, or DDR5, often with ECC functionality.

Reference:

CompTIA A+ Core 1 (220-1201) Objective 3.2: "Compare and contrast storage devices and memory... Memory technologies including ECC vs. non-ECC RAM and their use cases in servers versus desktops."

A technician is setting up a new SOHO wireless router. According to security best practices, which of the following should the technician do first?

A. Enable encryption.

B. Assign a static IP.

C. Change the default password.

D. Reset the router.

Explanation:

According to security best practices, the very first action a technician should take when configuring a new SOHO (Small Office/Home Office) router is to change the default administrative password. Manufacturers ship routers with well-known default credentials (e.g., username: admin, password: password). Attackers can easily find these online and gain full control over the network if they are not changed immediately. While other security measures are important, they are secondary to securing the management interface of the device itself.

Why the Other Options are Incorrect:

A. Enable encryption:

While configuring WPA3 (or WPA2) is critical for securing wireless data, you should secure the router's "brain" (the admin account) first. Many modern routers also have encryption enabled by default with a unique key, but they often still use a generic admin password.

B. Assign a static IP:

In a SOHO environment, the router usually acts as the gateway and typically has a static internal IP by default (like 192.168.1.1). Assigning a static external IP is a service provided by an ISP and is not a security best practice for initial setup.

D. Reset the router:

A "Reset" is used to return a router to its factory default settings. Since the technician is setting up a new router, it is already at factory defaults. Resetting it would be redundant and would not improve security.

References:

CompTIA A+ Exam Objectives (220-1201): Objective 2.3 – "Given a scenario, configure and personalize a SOHO wired/wireless network."

A user brings a laptop to work every morning, correctly seats it in the docking station, and then opens the laptop to begin work with no issues. After the user left the laptop at home during a two-week vacation, the laptop is no longer working. Upon returning to the office, the user reports that the keyboard and display are no longer working. Which of the following should the technician ask the user to do first?

A. Ensure the docking station is plugged in.

B. Press and release the laptop power button.

C. Plug the laptop in and let it charge overnight.

D. Connect the laptop directly to the network.

Which of the following wireless frequency ranges involves the use of channels 1, 6, and 11?

A. 2.4GHz

B. 5GHz

C. 6GHz

D. 60GHz

Explanation:

In the 2.4GHz frequency range, there are 11 available channels (in the US). Each channel is $22\text{ MHz}$ wide, but the channel centers are only $5\text{ MHz}$ apart, so neighboring channels overlap significantly. To prevent adjacent-channel interference (where access points on overlapping channels interfere with one another), technicians must use channels that do not overlap. Channels 1, 6, and 11 are the only set of three channels in the 2.4GHz spectrum that have enough "space" between them to operate simultaneously without overlapping.
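
The channel arithmetic can be sketched as follows. The sketch assumes the standard 2.4GHz plan in which channel centers are 5 MHz apart starting at 2412 MHz for channel 1, and uses the 22 MHz width quoted above; the helper names are hypothetical.

```python
CHANNEL_WIDTH_MHZ = 22  # channel width quoted in the explanation above

def center_mhz(channel: int) -> int:
    """Center frequency of a 2.4GHz channel (1-13), with 5 MHz spacing."""
    if not 1 <= channel <= 13:
        raise ValueError("formula applies to channels 1-13 only")
    return 2407 + 5 * channel

def overlaps(a: int, b: int) -> bool:
    """True if the 22 MHz-wide bands of two channels overlap."""
    return abs(center_mhz(a) - center_mhz(b)) < CHANNEL_WIDTH_MHZ

print([center_mhz(c) for c in (1, 6, 11)])   # [2412, 2437, 2462]
print(overlaps(1, 6), overlaps(6, 11))       # False False
print(overlaps(1, 5))                        # True
```

Channels five numbers apart have centers 25 MHz apart, which exceeds the 22 MHz width, while channels only four apart (20 MHz) still overlap. That is why the usable set is 1, 6, and 11.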

Why the Other Options are Incorrect:

B. 5GHz:

This range offers many more channels (up to 25 non-overlapping channels). Channels in this range are identified by much higher numbers (e.g., 36, 40, 44, 149) and do not follow the 1, 6, 11 scheme.

C. 6GHz:

Introduced with Wi-Fi 6E, this band provides even more spectrum (up to 14 additional $80\text{ MHz}$ channels). Like the 5GHz band, it uses a different numbering system and does not suffer from the same limited-channel overlap issues as 2.4GHz.

D. 60GHz:

This is used by standards like 802.11ad (WiGig). It is intended for very high-speed, short-range line-of-sight communication and uses an entirely different channelization structure.

References:

CompTIA A+ Exam Objectives (220-1201): Objective 2.4 – "Compare and contrast wireless networking protocols."
