A security administrator is configuring fileshares. The administrator removed the default permissions and added permissions for only users who will need to access the fileshares as part of their job duties. Which of the following best describes why the administrator performed these actions?

A. Encryption standard compliance

B. Data replication requirements

C. Least privilege

D. Access control monitoring

Explanation

The scenario describes a fundamental security practice: starting with restrictive access and only granting the minimum permissions necessary for a specific role or task.

Why C. Least Privilege is the Correct Answer

The Principle of Least Privilege is a core cybersecurity concept that mandates users and processes should only have the minimum level of access—permissions, rights, and privileges—necessary to perform their authorized tasks and nothing more.

Let's break down the administrator's actions against this principle:

"Removed the default permissions":

Operating systems often set overly permissive default permissions on new fileshares (e.g., "Everyone: Read" or "Authenticated Users: Modify"). These defaults are designed for ease of use, not security. By removing them, the administrator is eliminating broad, unnecessary access that could be exploited.

"Added permissions for only users who will need to access the fileshares as part of their job duties":

This is the direct application of least privilege. The administrator is meticulously granting access on a need-to-know and need-to-do basis. Only individuals with a verified business requirement can access the resource, and they are granted only the specific type of access they need (e.g., Read, Write, Modify).

The goal of these actions is to reduce the attack surface. If a user account is compromised, the attacker can access only the fileshares that the specific user was permitted to use. This contains the damage and limits the attacker's ability to move laterally across the network.
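To make the idea concrete, here is a minimal Python sketch of a default-deny access check. It assumes a simplified model in which each share maps usernames to an explicit set of rights. The share names, users, and rights are hypothetical, and real systems (for example, Windows share and NTFS permissions) are more granular. The key property is that there is no "Everyone" fallback: if a user is not listed, the answer is no.

```python
# Default-deny fileshare ACL: anyone not explicitly listed gets nothing.
# Share names, users, and rights below are hypothetical examples.
share_acl: dict[str, dict[str, set[str]]] = {
    "finance": {"alice": {"read", "write"}, "bob": {"read"}},
    "hr": {"carol": {"read", "write"}},
}


def is_allowed(share: str, user: str, action: str) -> bool:
    """Return True only if the user was explicitly granted this action."""
    # A missing share or a missing user both fall through to an empty set (deny).
    return action in share_acl.get(share, {}).get(user, set())


print(is_allowed("finance", "bob", "read"))   # True: explicitly granted
print(is_allowed("finance", "bob", "write"))  # False: not part of his duties
print(is_allowed("hr", "alice", "read"))      # False: no entry means no access
```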

Why the Other Options Are Incorrect

A. Encryption standard compliance

What it is:

Encryption compliance (e.g., following standards like FIPS 140-2, or regulations like GDPR that may mandate encryption) refers to ensuring data is encrypted both at rest (on the disk) and in transit (across the network).

Why it's incorrect:

The scenario describes configuring permissions (access control lists), not encryption. While controlling access is a part of protecting data, it is distinct from the act of scrambling data using cryptographic algorithms. The actions taken do not implement or enforce encryption.

B. Data replication requirements

What it is:

Data replication involves copying and synchronizing data across multiple storage systems or locations for purposes like redundancy, disaster recovery, or availability (e.g., having a failover server in another data center).

Why it's incorrect:

Configuring permissions on a single fileshare has absolutely no bearing on how or where data is copied. This is a function of storage management and backup solutions, not user access control.

D. Access control monitoring

What it is:

Access control monitoring is the process of auditing and reviewing who accessed what resources, when, and what actions they performed. This is a detective security control implemented through logging, SIEM systems, and periodic audits.

Why it's incorrect:

The administrator is configuring access controls, not monitoring them. They are setting the rules that will later be enforced and potentially monitored. The action described is preventative (stopping unauthorized access before it happens), not detective (logging it after it happens).

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 2.5: Explain the purpose of mitigation techniques used to secure the enterprise, which lists least privilege and access control (permissions) among those techniques.

The Principle of Least Privilege is a foundational element of virtually every organizational security policy. It is the driving force behind Access Control Policies and User Permissions and Rights Reviews. Implementing least privilege is a direct application of these policies to harden systems and protect sensitive data from unauthorized access, both malicious and accidental.

In summary:

The security administrator's actions are a textbook example of implementing the Principle of Least Privilege. They are ensuring that access to the fileshares is restricted to the minimum set of users and permissions required for business functionality, thereby enhancing the organization's overall security posture.

In which of the following scenarios is tokenization the best privacy technique to use?

A. Providing pseudo-anonymization for social media user accounts

B. Serving as a second factor for authentication requests

C. Enabling established customers to safely store credit card information

D. Masking personal information inside databases by segmenting data

Explanation

To understand why, we must first define tokenization.

Tokenization is a data security process where a sensitive data element (like a Primary Account Number - PAN) is replaced with a non-sensitive equivalent, called a token. The token has no exploitable meaning or value and is used merely as a reference to the original data.

How it works:

The sensitive data is stored in an ultra-secure, centralized system called a token vault. The vault is the only place where the token can be mapped back to the original sensitive data. The token is then used in business systems, applications, and databases where the original data would normally be used, but without the associated risk.

Key property:

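In vault-based tokenization, the process is non-mathematical. Unlike encryption, which uses a key and a reversible algorithm to transform data, tokenization generates a random token and stores the mapping in a database (the vault). Because the token has no mathematical relationship to the original value, there is no key or algorithm for an attacker to break; the only way back to the original data is through the vault.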
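A toy model shows why a stolen token is useless on its own. This minimal Python sketch assumes an in-memory dictionary standing in for the vault; `TokenVault`, `tokenize`, and `detokenize` are hypothetical names, and a real vault is a hardened, access-controlled service, often operated by a payment processor. Tokens come from Python's `secrets` module, so nothing about the card number can be computed from them.

```python
import secrets


class TokenVault:
    """A toy, in-memory token vault (illustration only, not production-grade).

    Tokens are random, so they carry no mathematical relationship to the
    original value; reversing one requires a lookup in this vault.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so the same card always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = secrets.token_urlsafe(16)
        while token in self._token_to_value:  # guard against a (very unlikely) collision
            token = secrets.token_urlsafe(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back; unknown tokens raise KeyError.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")  # the test card number used in this section
# The merchant database would store only `token`, never the card number.
print(token != "4111111111111111", vault.detokenize(token))
```

In the payment flow that follows, the processor plays the role of `vault` and the merchant keeps only the token.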

Why C. is the Correct Answer

Tokenization is the industry-standard best practice for protecting stored payment card information. This is its most common and critical application.

The Scenario:

A company wants to allow returning customers to make quick purchases without re-entering their credit card details each time.

The Solution with Tokenization:

During the first transaction, the customer's actual credit card number (e.g., 4111 1111 1111 1111) is sent to a secure payment processor.

The processor validates the card and returns a unique, randomly generated token (e.g., f&2pL9!qXz1*8@wS) to the merchant's system.

The merchant stores this token in their customer database instead of the real credit card number.

For subsequent purchases, the customer can select their saved payment method. The system sends the token (not the real card number) to the processor to authorize the payment.

Why it's the "best" technique:

Reduces PCI DSS Scope:

The merchant's systems never store sensitive cardholder data. This dramatically simplifies their compliance with the Payment Card Industry Data Security Standard (PCI DSS), as the systems handling tokens are not subject to its most stringent requirements.

Minimizes Risk:

If the merchant's database is breached, the attackers only steal useless tokens. These tokens cannot be reversed into the original card numbers without access to the highly secured, separate token vault (which is typically managed by a PCI-compliant third-party specialist).

Why the Other Options Are Incorrect

A. Providing pseudo-anonymization for social media user accounts

Pseudonymization (the "pseudo-anonymization" in the answer choice) replaces identifying fields with artificial identifiers (e.g., replacing "John Doe" with "User_58472"). Unlike true anonymization, it remains reversible by whoever holds the mapping. While this uses a similar concept of substitution, it is not typically called "tokenization" in the security industry. More importantly, the primary goal for social media is usually usability and abstracting identity, not securing highly regulated financial data, so tokenization is overkill for this purpose.

B. Serving as a second factor for authentication requests

This describes the function of a hardware or software token (like a YubiKey or Google Authenticator app) that generates a one-time password (OTP). While these devices are called "tokens," this is a different use of the word. They are used for authentication, not for data privacy and protection. The question specifically asks for a "privacy technique," which refers to protecting data at rest, not verifying identity.

D. Masking personal information inside databases by segmenting data

This describes two different techniques:

Data Masking:

Obscuring specific data within a dataset (e.g., displaying only the last four digits of an SSN: XXX-XX-1234). This is often used in development and testing environments.

Data Segmentation:

Isolating sensitive data into separate tables, databases, or networks to control access.

While valuable, these are not tokenization. Tokenization completely replaces the data value with a random token, whereas masking only hides part of it. Segmentation is an architectural control, not a data substitution technique.
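The difference between masking and tokenization can be seen side by side. In this minimal Python sketch, `mask_ssn` and `tokenize` are hypothetical helpers, and a plain dictionary stands in for a secured token vault. Masking leaves part of the real value visible; tokenization replaces the whole value with something random.

```python
import secrets


def mask_ssn(ssn: str) -> str:
    """Data masking: hide all but the last four digits, keeping the format."""
    digits = ssn.replace("-", "")
    return "XXX-XX-" + digits[-4:]


def tokenize(value: str, vault: dict[str, str]) -> str:
    """Tokenization: replace the whole value with a random token.

    `vault` is a plain dict standing in for a secured token vault.
    """
    token = secrets.token_hex(8)  # 16 random hex characters
    vault[token] = value
    return token


vault: dict[str, str] = {}
ssn = "123-45-1234"
masked = mask_ssn(ssn)        # part of the real value is still shown
token = tokenize(ssn, vault)  # random; not derived from the SSN at all
print(masked, token)
```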

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 3.3: Compare and contrast concepts and strategies to protect data.

Specifically, it tests your knowledge of methods to secure data, a list that includes tokenization, masking, and segmentation. Tokenization is a critical tool for reducing the risk associated with storing sensitive financial information. It is directly related to compliance frameworks like PCI DSS, which is a major concern for any organization handling payment cards.

Which of the following can be used to identify potential attacker activities without affecting production servers?

A. Honey pot

B. Video surveillance

C. Zero Trust

D. Geofencing

Explanation

The question asks for a security tool that serves two specific purposes:

Identify potential attacker activities:

It must act as a source of intelligence, allowing defenders to observe and study the methods, tools, and intentions of an adversary.

Without affecting production servers:

It must accomplish this goal in a way that isolates the interaction from the organization's real, live operational systems. This is crucial to ensure that the monitoring activity does not introduce risk or downtime to business-critical services.

Why A. Honey pot is the Correct Answer

A honey pot is a security mechanism specifically designed to be a decoy. It is a system or server that is intentionally set up to be vulnerable, attractive, and seemingly valuable to attackers.

How it works:

The honey pot is deployed in a controlled and monitored segment of the network, isolated from production systems. It mimics real production services (e.g., a fake database server, a web server with vulnerabilities) but contains no actual business data.

Identifying Attacker Activities:

Any interaction with the honey pot is, by definition, suspicious or malicious. Security teams can monitor everything the attacker does:

Tools used:

What exploitation scripts or scanners do they run?

Techniques:

How do they attempt to escalate privileges or move laterally?

Intent:

What are they looking for? (e.g., credit card data, intellectual property).

This provides invaluable threat intelligence on the latest attack methods.

No Effect on Production:

Because the honey pot is an isolated, fake system, attacks against it are contained, provided the isolation is configured correctly. Attackers can spend time and effort compromising it without ever touching or affecting a real server. This makes it a safe and effective tool for learning about threats.
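The observation side of a honey pot can be surprisingly simple. This is a minimal sketch of a low-interaction honey pot in Python: a TCP listener that serves nothing real and only records who connected and what they sent. The loopback address, OS-chosen port, and `run_honeypot` helper are assumptions for the demo; a real deployment would bind to a decoy address on an isolated segment, forward logs to a separate collector, and typically emulate services in much more detail.

```python
import socket
import threading
from datetime import datetime, timezone


def run_honeypot(listener: socket.socket, log: list[str], max_conns: int) -> None:
    """Accept connections on a decoy port and record what each client sends.

    Nothing real is served: the only job is to observe. In practice the
    log would go to a separate, protected collector rather than a list.
    """
    for _ in range(max_conns):
        conn, (src_ip, src_port) = listener.accept()
        with conn:
            conn.settimeout(2.0)  # don't let a silent client hang the decoy
            data = bytearray()
            try:
                while len(data) < 1024:  # cap how much is captured per client
                    chunk = conn.recv(1024)
                    if not chunk:  # client closed the connection
                        break
                    data += chunk
            except socket.timeout:
                pass  # client went quiet; keep what was captured
            stamp = datetime.now(timezone.utc).isoformat()
            log.append(f"{stamp} {src_ip}:{src_port} sent {bytes(data)!r}")


# Demo on loopback; port 0 lets the OS choose a free port.
log: list[str] = []
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen()
port = listener.getsockname()[1]

worker = threading.Thread(target=run_honeypot, args=(listener, log, 1))
worker.start()

# Simulate an attacker probing the decoy service.
with socket.create_connection(("127.0.0.1", port)) as probe:
    probe.sendall(b"USER admin\r\n")

worker.join()
listener.close()
print(log[0])
```

Because no legitimate user has a reason to connect, every line in `log` is a lead worth investigating.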

Why the Other Options Are Incorrect

B. Video surveillance

What it is:

Video surveillance involves using cameras to monitor physical spaces. It is a physical security control.

Why it's incorrect:

While video surveillance can identify the physical presence of an intruder (e.g., someone plugging a device into a server rack), it is completely useless for identifying cyber attacker activities such as network scanning, exploitation attempts, or malware deployment. It operates in a different domain (physical vs. logical) and does not interact with or monitor network traffic.

C. Zero Trust

What it is:

Zero Trust is a security model and philosophy, not a specific tool. Its core principle is "never trust, always verify." It mandates strict identity verification, micro-segmentation, and least-privilege access for every person and device trying to access resources on a network, regardless of whether they are inside or outside the network perimeter.

Why it's incorrect:

While a Zero Trust architecture mitigates attacker activities by making lateral movement and access extremely difficult, it is not primarily a tool for identifying them. It is a preventative and access control framework. Furthermore, its policies are applied directly to production systems and networks; it is not a separate, isolated decoy.

D. Geofencing

What it is:

Geofencing uses location data (such as GPS, RFID, Wi-Fi, or cellular signals) to create a virtual geographic boundary. It is an access control mechanism that can trigger actions when a device enters or leaves a defined area (e.g., allowing access to an app only when the user is within the country, or sending an alert if a device leaves a corporate campus).

Why it's incorrect:

Geofencing is used to allow or block access based on location. It might prevent an attacker from a blocked country from accessing a production server, but it does not identify or study their activities. It is a preventative control that operates at the perimeter, not an intelligence-gathering tool that lures attackers into a safe, observable environment.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 1.2: Summarize fundamental security concepts.

Specifically, it falls under deception and disruption technology, which includes honeypots, honeynets, honeyfiles, and honeytokens. Honey pots are a classic form of active deception designed to distract attackers and gather intelligence on their tactics, techniques, and procedures (TTPs). This intelligence is crucial for improving an organization's overall defenses.

A company is working with a vendor to perform a penetration test. Which of the following includes an estimate of the number of hours required to complete the engagement?

A. SOW

B. BPA

C. SLA

D. NDA

Explanation

When a company engages a third-party vendor for a professional service like a penetration test, several formal documents are used to define the relationship, scope, and expectations. The question asks which document specifically outlines the projected effort, including the estimated hours for the project.

Why A. SOW is the Correct Answer

A Statement of Work (SOW) is a foundational document in project management and contracting. It provides a detailed description of the work to be performed, including deliverables, timelines, milestones, and—critically—the estimated level of effort.

Content of an SOW:

For a penetration test, the SOW would include:

Scope:

The specific systems, networks, or applications to be tested (e.g., "External IP range 192.0.2.0/24" and "the customer-facing web application portal").

Objectives:

The goals of the test (e.g., "Identify and exploit vulnerabilities to determine business impact").

Deliverables:

The tangible outputs (e.g., a detailed report of findings, an executive summary, a remediation roadmap).

Timeline:

The start date, end date, and key milestones (e.g., "Kick-off meeting on Date X," "Draft report delivered by Date Y").

Level of Effort:

An estimate of the number of hours or days required to complete each phase of the engagement (e.g., "Planning: 8 hours," "Execution: 40 hours," "Reporting: 16 hours"). This is directly tied to the project's cost.
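The level-of-effort figures translate directly into the engagement's size and cost. This short Python sketch totals the example phase estimates above; the hourly rate is a hypothetical placeholder, since real SOWs state their own pricing.

```python
# Phase estimates taken from the example level-of-effort line above (hours).
effort_hours = {"Planning": 8, "Execution": 40, "Reporting": 16}

# Hypothetical blended hourly rate; a real SOW states its own pricing.
HOURLY_RATE_USD = 200

total_hours = sum(effort_hours.values())        # 8 + 40 + 16 = 64
estimated_cost = total_hours * HOURLY_RATE_USD  # 64 * 200 = 12,800

for phase, hours in effort_hours.items():
    print(f"{phase:<10} {hours:>3} h")
print(f"{'Total':<10} {total_hours:>3} h  (~${estimated_cost:,})")
```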

The SOW acts as the single source of truth for what the vendor will do and how long they expect it to take, making it the correct answer.

Why the Other Options Are Incorrect

B. BPA (Business Partners Agreement)

What it is:

A BPA is an agreement between business partners that defines the overall partnership, such as each party's responsibilities, how profits and losses are shared, and how decisions are made.

Why it's incorrect:

A BPA governs the long-term relationship between two organizations, not the details of a single engagement. It would not contain the estimated hours for a specific penetration test; that level of detail belongs in the SOW.

C. SLA (Service Level Agreement)

What it is:

An SLA is an agreement that defines the level of service a customer can expect from a vendor. It focuses on measurable metrics like performance, availability, and responsiveness.

Why it's incorrect:

An SLA is used for ongoing services (e.g., cloud hosting, helpdesk support, managed security services). It would contain metrics like "99.9% uptime" or "critical tickets responded to within 1 hour." A penetration test is a project-based engagement, not an ongoing service. Therefore, its details are not covered in an SLA. An SLA might govern the vendor's support response time after the test, but not the hours required for the test itself.

D. NDA (Non-Disclosure Agreement)

What it is:

An NDA is a legal contract that creates a confidential relationship between parties to protect any type of confidential and proprietary information or trade secrets.

Why it's incorrect:

An NDA is crucial for a penetration test—it is signed before any work begins to ensure the vendor keeps all findings about the company's vulnerabilities strictly confidential. However, an NDA does not contain any details about the work to be performed. It only covers the privacy and handling of information exchanged during the engagement.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 5.3: Explain the processes associated with third-party risk assessment and management.

Part of organizational security is proper third-party risk management. This involves establishing formal agreements like SOWs to clearly define the scope, responsibilities, and expectations for any external vendor performing security assessments. This ensures the engagement is effective, meets its goals, and stays within agreed-upon boundaries (like time and cost).

The marketing department set up its own project management software without telling the appropriate departments. Which of the following describes this scenario?

A. Shadow IT

B. Insider threat

C. Data exfiltration

D. Service disruption

Explanation

This scenario describes a common occurrence in many organizations where business units, seeking agility or bypassing perceived slow processes, implement technology solutions independently.

Why A. Shadow IT is the Correct Answer

Shadow IT refers to any information technology systems, devices, software, applications, or services that are managed and used without the explicit approval or oversight of an organization's central IT department.

Let's break down the scenario against this definition:

"set up its own project management software":

The marketing department is implementing a technology solution (software) on its own.

"without telling the appropriate departments":

This is the core of Shadow IT. The appropriate departments (almost always including the IT department) were not informed. IT is responsible for ensuring that software is secure, compliant, licensed, integrated with other systems, and properly backed up. By bypassing IT, the marketing department has circumvented these critical governance controls.
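The definition boils down to a set difference: anything in use that is absent from IT's approved inventory. This minimal Python sketch assumes a hypothetical approved-software register and a hypothetical record of what each department actually runs; "TaskBoardPro" stands in for the marketing department's project management tool.

```python
# Hypothetical data: the IT-approved software register and the software
# each department was observed using.
approved_software = {"Microsoft 365", "Jira", "Slack"}

observed_in_use = {
    "Marketing": {"Microsoft 365", "Slack", "TaskBoardPro"},
    "Finance": {"Microsoft 365", "Jira"},
}


def find_shadow_it(observed: dict[str, set[str]], approved: set[str]) -> dict[str, set[str]]:
    """Return, per department, software in use that IT never approved."""
    return {
        dept: apps - approved
        for dept, apps in observed.items()
        if apps - approved  # keep only departments with unapproved apps
    }


print(find_shadow_it(observed_in_use, approved_software))  # {'Marketing': {'TaskBoardPro'}}
```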

The Risks of Shadow IT:

Security Vulnerabilities:

The software might not be patched or could have known security flaws that IT would have identified.

Compliance Violations:

The software might store sensitive customer or company data in a way that violates regulations like GDPR, HIPAA, or PCI DSS.

Data Loss:

Without IT oversight, the data in the software might not be included in corporate backups.

Integration Issues:

The software might not work with other company systems, creating data silos and inefficiencies.

Why the Other Options Are Incorrect

B. Insider threat

What it is:

An insider threat is a security risk that originates from within the organization—typically by an employee, former employee, contractor, or business partner. The threat can be malicious (e.g., stealing data for a competitor) or unintentional (e.g., falling for a phishing scam).

Why it's incorrect:

Insider threat is a broad category, and Shadow IT can create vulnerabilities that an insider threat could exploit. However, the scenario describes a specific behavior with its own name: deploying technology without IT's knowledge. The marketing department's motivation is likely to improve its own productivity, not to harm the organization, so "insider threat" is not the best description.

C. Data exfiltration

What it is:

Data exfiltration is the unauthorized transfer of data from a corporate network to an external location. This is a specific malicious action, often the goal of an attack or an insider threat.

Why it's incorrect:

The scenario describes the setup of unauthorized software. It does not indicate that any data has been stolen or transferred out of the network. Data exfiltration could be a consequence of the insecure Shadow IT application, but it is not what is being described.

D. Service disruption

What it is:

A service disruption is an event that causes a reduction in the performance or availability of a service (e.g., a server crash, a network outage, a DDoS attack).

Why it's incorrect:

The scenario does not mention any service being taken offline or becoming unavailable. The marketing department is adding a new, unauthorized service, not disrupting an existing one. Again, service disruption could be a potential result if the new software conflicts with other systems, but it is not the primary term for the action taken.

Reference to Exam Objectives

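This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 2.1: Compare and contrast common threat actors and motivations, which lists Shadow IT as a threat actor. It also connects to Objective 5.1: Summarize elements of effective security governance.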

Shadow IT is a direct failure of security governance. Effective governance involves establishing policies (like an Acceptable Use Policy (AUP) and Change Management processes) that require departments to work with IT for any technology procurement. This ensures that all technology used by the organization meets minimum security, compliance, and operational standards.

In summary:

The scenario is a textbook example of Shadow IT. It highlights the tension between business agility and security compliance, and the importance of having clear policies and processes to manage technology within an organization.

A company is concerned about the theft of client data from decommissioned laptops. Which of the following is the most cost-effective method to decrease this risk?

A. Wiping

B. Recycling

C. Shredding

D. Deletion

Explanation

The core concern is ensuring that sensitive client data cannot be recovered from storage drives (HDDs or SSDs) inside laptops that are being taken out of service ("decommissioned"). The solution must be both effective and the most cost-effective.

Why A. Wiping is the Correct Answer

Wiping (a form of sanitization, also called secure erasure) is a software-based process that overwrites all the data on a storage drive with meaningless patterns (e.g., all 0s, all 1s, or random characters).

Effectiveness:

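A single overwrite pass is generally sufficient to make data on modern hard drives unrecoverable; NIST SP 800-88 considers one pass adequate for clearing magnetic media rather than recommending multiple passes. SSDs are different: because wear leveling remaps blocks, overwriting may miss data, so the drive's built-in sanitize or secure-erase command (or cryptographic erase) is preferred. Done correctly, wiping renders the original client data unrecoverable.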
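The core of an overwrite wipe fits in a few lines. This minimal Python sketch assumes the target is a disk image file rather than a live device, and `wipe_image` is a hypothetical helper, not a real tool's API. It performs a single zero-fill pass and then verifies the result. Overwriting a file in place on a journaling or copy-on-write file system, or on an SSD, can leave older copies elsewhere, which is why real decommissioning uses dedicated tools or drive-level sanitize commands.

```python
import os
import tempfile


def wipe_image(path: str, chunk_size: int = 1024 * 1024) -> None:
    """Overwrite every byte of a file with zeros in a single pass.

    Illustration only: intended for a disk image file. Real drives need a
    dedicated wiping tool, and SSDs should use the drive's built-in
    sanitize or secure-erase command instead of overwriting.
    """
    remaining = os.path.getsize(path)
    zeros = bytes(chunk_size)  # a buffer of zero bytes
    with open(path, "r+b") as f:  # open for in-place writing, no truncation
        while remaining > 0:
            n = min(chunk_size, remaining)
            f.write(zeros[:n])
            remaining -= n
        f.flush()
        os.fsync(f.fileno())  # push the zeros to storage, not just the OS cache


# Demo: create a fake "image" containing client data, then wipe it.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"client: Jane Doe, acct 12345\n" * 1000)
    image_path = tmp.name

wipe_image(image_path)
with open(image_path, "rb") as f:
    content = f.read()
print(len(content), set(content))  # same size; only zero bytes remain
os.remove(image_path)
```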

Cost-Effectiveness:

This is the key differentiator. Wiping is performed using software. This means:

The physical laptop hardware (including the drive) remains intact and reusable. The company can resell the laptop or donate it, recouping some value and offsetting the cost of new equipment.

No specialized destruction equipment needs to be purchased.

The process can often be automated and performed in bulk on many laptops simultaneously, reducing labor costs.

It is significantly cheaper than physical destruction, while still providing a high level of security for this specific use case.

Why the Other Options Are Incorrect

B. Recycling

What it is:

Recycling involves sending electronic waste to a facility where materials are recovered and reused.

Why it's incorrect and risky:

Simply recycling a laptop without first sanitizing the drive is a major data breach risk. The recycling company or anyone who handles the laptop before it's dismantled could easily remove the drive and recover all the data. "Recycling" is not a data destruction method; it is a disposal method that must be preceded by a method like wiping or shredding.

C. Shredding

What it is:

Shredding is the physical destruction of the entire laptop or, more commonly, its storage drive using industrial machinery that tears it into small pieces.

Why it's not the most cost-effective:

Shredding is highly effective and is the best method for drives that have failed and cannot be wiped. However, it is not cost-effective in this scenario because:

It destroys the hardware, eliminating any potential resale value.

It often requires paying a third-party service to pick up and destroy the equipment, which incurs a per-item cost.

While extremely secure, it is overkill and unnecessarily expensive for functional drives when a software-based wiping solution is available and sufficient.

D. Deletion

What it is:

Deletion, whether dragging a file to the trash/recycle bin or running a quick format, does not reliably remove the actual data from the drive.

Why it's incorrect and dangerous:

These operations typically remove only the pointers to where the data is stored on the disk. The data itself remains physically present until the sectors are overwritten by new data. Relying on file deletion or a quick format is like removing a book's entry from a library's card catalog: the book is still on the shelf and easily found. This provides no meaningful security and is the worst option listed.
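A toy model makes the pointer-versus-data distinction concrete. This Python sketch assumes a drastically simplified disk with one block per file; `ToyDisk` is a hypothetical class, and real file systems (and SSD TRIM behavior) are considerably more complex.

```python
class ToyDisk:
    """A toy model of a disk: a directory of pointers plus raw data blocks."""

    def __init__(self, num_blocks: int = 8) -> None:
        self.blocks: list[bytes] = [b""] * num_blocks  # raw storage
        self.directory: dict[str, int] = {}            # filename -> block index
        self.free: set[int] = set(range(num_blocks))   # blocks the OS may reuse

    def write(self, name: str, data: bytes) -> None:
        index = min(self.free)
        self.free.remove(index)
        self.blocks[index] = data
        self.directory[name] = index

    def delete(self, name: str) -> None:
        # Like a normal delete: drop the pointer and mark the block free.
        # The bytes in self.blocks are left untouched.
        index = self.directory.pop(name)
        self.free.add(index)

    def recover(self) -> list[bytes]:
        # What a recovery tool does: scan free blocks for leftover data.
        return [self.blocks[i] for i in sorted(self.free) if self.blocks[i]]


disk = ToyDisk()
disk.write("clients.csv", b"Jane Doe,4111111111111111")
disk.delete("clients.csv")
print("clients.csv" in disk.directory)  # False: the "catalog entry" is gone
print(disk.recover())                   # the data is still on the "shelf"
```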

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 4.2: Explain the security implications of proper hardware, software, and data asset management.

This objective covers disposal and decommissioning, including sanitization, destruction, and certification. Securely decommissioning assets to prevent data remanence (the residual representation of data that has been deleted) is a critical practice. The exam expects you to know the various methods (e.g., wiping, shredding, degaussing) and their appropriate use cases.

A company is planning a disaster recovery site and needs to ensure that a single natural disaster would not result in the complete loss of regulated backup data. Which of the following should the company consider?

A. Geographic dispersion

B. Platform diversity

C. Hot site

D. Load balancing

Explanation

The core requirement is to protect regulated backup data from a "single natural disaster." This implies that the company's primary data center and its backup location could both be vulnerable if they are located close to each other (e.g., both in the same city or seismic zone). The goal is to create a strategy where the backup is immune to the same regional disaster that affects the primary site.

Why A. Geographic Dispersion is the Correct Answer

Geographic dispersion (or geographic diversity) is the practice of physically separating IT resources, such as data centers and backup vaults, across different geographic regions.

How it addresses the requirement:

By storing backup data at a site located a significant distance away from the primary site, the company ensures that a single natural disaster (e.g., hurricane, earthquake, flood, wildfire) is highly unlikely to impact both locations simultaneously. A hurricane striking the East Coast of the United States will not affect a backup facility on the West Coast or in a different country.
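Separation between sites can be checked with the haversine great-circle formula. This minimal Python sketch uses approximate coordinates for New York City and Los Angeles to match the coast-to-coast example above; the 500 km threshold is a hypothetical policy value, not a regulatory figure. Distance alone is not sufficient: the sites should also sit in different hazard zones (not the same fault line, flood plain, or hurricane path).

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points using the haversine formula."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


# Hypothetical policy threshold; organizations set their own based on risk.
MIN_SEPARATION_KM = 500

primary = (40.71, -74.01)  # New York City (approximate)
backup = (34.05, -118.24)  # Los Angeles (approximate)

d = distance_km(*primary, *backup)
print(f"{d:.0f} km apart; meets policy: {d >= MIN_SEPARATION_KM}")
```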

Regulatory Compliance:

The question specifically mentions "regulated backup data." Regulations in sectors such as finance and healthcare commonly require backup and disaster recovery plans that keep data available after a disaster, and storing backups at a geographically distant site is a standard way to meet that requirement. This makes geographic dispersion not just a best practice, but often a compliance necessity.

Why the Other Options Are Incorrect

B. Platform diversity

What it is:

Platform diversity involves using different types of hardware, operating systems, or software vendors to avoid a single point of failure caused by a vendor-specific vulnerability or outage.

Why it's incorrect:

While valuable for mitigating risks like software zero-day exploits or hardware failures, platform diversity does not protect against a large-scale physical threat like a natural disaster. If both the primary and backup systems are in the same geographic area, a flood will destroy them regardless of whether one runs on Windows and the other on Linux.

C. Hot site

What it is:

A hot site is a fully operational, ready-to-use disaster recovery facility that has all the necessary hardware, software, and network connectivity to quickly take over operations. It is a type of recovery site, not a strategy for protecting the backups themselves.

Why it's incorrect:

The question is about preventing the loss of backup data. A hot site is a consumer of backups; it needs recent backups to restore services. If the backup data itself is stored at or near the hot site, and that site is hit by the same disaster, the data is lost. The company must first use geographic dispersion to protect the backup data, and then they can decide to use a hot site (which should also be geographically dispersed) for recovery.

D. Load balancing

What it is:

Load balancing distributes network traffic or application requests across multiple servers to optimize resource use, maximize throughput, and prevent any single server from becoming a bottleneck.

Why it's incorrect:

Load balancing is a technique for enhancing performance and availability during normal operations. It is not a disaster recovery or data protection mechanism. It does nothing to protect the actual data from loss in a disaster. If the data center is destroyed, the load balancer and all the servers behind it are gone.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 3.4: Explain the importance of resilience and recovery in security architecture.

Specifically, it tests knowledge of site considerations (hot, warm, and cold sites and geographic dispersion), which the objective lists alongside platform diversity and load balancing. A core principle of disaster recovery planning is ensuring that recovery sites and backups are geographically distant from primary operations to mitigate the risk of large-scale regional disasters. This is a fundamental concept in ensuring business continuity.

A company would like to provide employees with computers that do not have access to the internet in order to prevent information from being leaked to an online forum. Which of the following would be best for the systems administrator to implement?

A. Air gap

B. Jump server

C. Logical segmentation

D. Virtualization

Explanation

The company's goal is absolute: to ensure certain computers "do not have access to the internet" to prevent data exfiltration to online forums. This requires a solution that creates a total, physical barrier to external networks.

Why A. Air Gap is the Correct Answer

An air gap is a security measure that involves physically isolating a computer or network from any other network that could pose a threat, especially from unsecured networks like the internet.

How it works:

An air-gapped system has no wired connections (like Ethernet cables) and no wireless interfaces (like Wi-Fi or Bluetooth) that link it to the internet or to any network that can reach the internet. It may be a standalone machine or part of a small network that is itself physically isolated.

Meeting the Requirement:

This is the only option that physically removes the computer's ability to reach the internet. Since there is no path for data to travel to an external network, information cannot be leaked directly from that machine to an online forum. Data can only be transferred via physical media (e.g., USB drives), which must be controlled with strict policies because removable media is a common way for an air gap to be bridged.

Why the Other Options Are Incorrect

B. Jump server

What it is:

A jump server (or bastion host) is a heavily fortified computer that provides a single, controlled point of access into a secure network segment from a less secure network (like the internet). Administrators first connect to the jump server and then "jump" from it to other internal systems.

Why it's incorrect:

A jump server is, by design, connected to a less secure network (often the internet or the general corporate network) as well as to the protected segment. Its purpose is to control administrative access into segregated networks, not to prevent internet access. The computers behind the jump server would still be reachable over the network, even if that access is tightly controlled. This does not meet the requirement of having "no access."

C. Logical segmentation

What it is:

Logical segmentation (often achieved through VLANs or firewall rules) involves dividing a network into smaller subnetworks to control traffic flow and improve security. It restricts communication between segments.

Why it's incorrect:

Logical segmentation is a software-based control. It relies on configurations that can be changed, misconfigured, or bypassed through hacking techniques like VLAN hopping. While it can be used to block internet access with a firewall rule, it does not physically remove the capability. The network interface is still active and presents a potential attack surface. It does not provide the absolute, physical guarantee required by the scenario.
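To show why logical segmentation is only as strong as its configuration, here is a minimal Python sketch of a default-deny policy between subnets. The segment names, address ranges, and the `ALLOWED_FLOWS` table are hypothetical, and a real firewall or VLAN configuration looks very different. The point is that a single rule edit can reopen internet access, which cannot happen with an air gap.

```python
import ipaddress

# Hypothetical segments (e.g., one VLAN subnet each).
SEGMENTS = {
    "users": ipaddress.ip_network("10.0.10.0/24"),
    "servers": ipaddress.ip_network("10.0.20.0/24"),
    "restricted": ipaddress.ip_network("10.0.30.0/24"),
}

# Default deny: only these (source segment, destination segment) pairs may communicate.
ALLOWED_FLOWS = {("users", "servers")}

def segment_of(address: str) -> str:
    """Map an IP address to its segment name, or 'external' if it matches none."""
    ip = ipaddress.ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return "external"  # anything outside the defined segments, such as the internet

def is_allowed(src: str, dst: str) -> bool:
    """Check a flow against the allow-list; everything else is denied."""
    return (segment_of(src), segment_of(dst)) in ALLOWED_FLOWS

print(is_allowed("10.0.10.5", "10.0.20.7"))    # True: users -> servers
print(is_allowed("10.0.30.5", "203.0.113.9"))  # False: restricted -> internet

# One configuration change silently restores internet access for the restricted segment.
ALLOWED_FLOWS.add(("restricted", "external"))
print(is_allowed("10.0.30.5", "203.0.113.9"))  # True
```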

D. Virtualization

What it is:

Virtualization involves creating a software-based (virtual) version of a computer, server, storage device, or network resource.

Why it's incorrect:

A virtual machine (VM) is entirely dependent on the host system's network configuration. If the host computer has internet access, the VM can also be given internet access through virtual networking. Virtualization is a method of organizing compute resources, not a method of enforcing network isolation. An administrator could easily create a VM with no internet access, but that would be achieved through logical segmentation (virtual network settings), not virtualization itself.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 3.1: Compare and contrast security implications of different architecture models.

Part of secure architecture is understanding isolation techniques. Logical segmentation and physical isolation (air gapping) are key concepts. The exam requires you to know the difference and apply the correct one based on the security requirement. An air gap is the strongest form of isolation and is used for highly sensitive systems (e.g., military networks, industrial control systems, critical infrastructure).

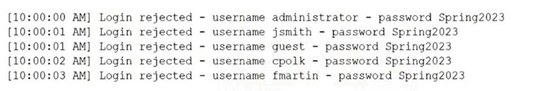

A security analyst is reviewing the following logs:

Which of the following attacks is most likely occurring?

A. Password spraying

B. Account forgery

C. Pass-the-hash

D. Brute-force

Explanation

To identify the attack, we must analyze the pattern of the login attempts shown in the log:

Multiple Usernames:

The attacker is trying many different usernames (administrator, jsmith, guest, cpoik, frnartin).

Single Password:

Every single login attempt is using the exact same password: Spring2023.

Rapid Pace:

The attempts are happening in rapid succession, one per second.

This pattern is the classic signature of a password spraying attack.

Why A. Password Spraying is the Correct Answer

Password spraying is a type of brute-force attack that avoids account lockouts by using a single common password against a large number of usernames before moving on to try a second common password.

How it works:

Instead of trying many passwords against one username (which quickly triggers lockout policies), the attacker takes one password (e.g., Spring2023, Password1, Welcome123) and "sprays" it across a long list of usernames. This technique flies under the radar of lockout policies that are triggered by excessive failed attempts on a single account.
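The lockout-evasion idea can be illustrated with a small simulation of a per-account failure counter. This is a sketch under assumptions: the threshold of 5, the helper name `locked_accounts`, and the sample passwords beyond those named above are hypothetical. The model also ignores the time window after which real lockout counters reset.

```python
from collections import Counter

LOCKOUT_THRESHOLD = 5  # hypothetical policy: lock an account after 5 failed logins

def locked_accounts(attempts, threshold=LOCKOUT_THRESHOLD):
    """Return the usernames a per-account failure counter would lock out.

    `attempts` is a list of (username, password) pairs, all assumed to fail.
    """
    failures = Counter(user for user, _password in attempts)
    return {user for user, count in failures.items() if count >= threshold}

users = ["administrator", "jsmith", "guest"]
common_passwords = ["Spring2023", "Password1", "Welcome123", "Summer2023", "Winter2023"]

# Traditional brute force: many passwords against one account.
brute_force = [("jsmith", pw) for pw in common_passwords]

# One spraying round: a single password against every account.
spray_round = [(user, "Spring2023") for user in users]

print(locked_accounts(brute_force))  # {'jsmith'}
print(locked_accounts(spray_round))  # set()
```

Because each account sees only one failure per spraying round, an attacker who waits out the counter's reset window between rounds can keep trying new passwords without ever reaching the threshold.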

Matching the Log:

The log shows the attacker using one password (Spring2023) against five different user accounts in just a few seconds. This is a classic example of a password spray in progress.

Why the Other Options Are Incorrect

B. Account forgery

What it is:

This is not a standard term for a specific attack technique. It could loosely refer to creating a fake user account or spoofing an identity, but it does not describe a pattern of automated authentication attempts. It is a distractor.

C. Pass-the-hash

What it is:

Pass-the-hash is a lateral movement technique used after an initial compromise. An attacker who has already compromised a system steals the hashed version of a user's password from memory. They then use that hash to authenticate to other systems on the network without needing to crack the plaintext password.

Why it's incorrect:

This attack does not involve repeated login attempts with a plaintext password like Spring2023. It uses stolen cryptographic hashes and would not generate a log of "Login rejected - username - password" events.

D. Brute-force

What it is:

A brute-force attack is a general term for trying all possible combinations of characters to guess a password.
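The phrase "all possible combinations" grows very quickly with password length, and a short calculation makes that concrete. The guess rate below is an assumed figure for illustration only. Actual offline cracking speed depends heavily on the hashing algorithm and hardware, and online guessing against a live login is throttled far more by lockout policies.

```python
def keyspace(charset_size: int, length: int) -> int:
    """Number of possible passwords of exactly `length` characters."""
    return charset_size ** length

def worst_case_days(charset_size: int, length: int, guesses_per_second: float) -> float:
    """Days needed to try every candidate at a constant, assumed guess rate."""
    return keyspace(charset_size, length) / guesses_per_second / 86_400

print(keyspace(26, 4))  # 456976: four lowercase letters
print(keyspace(95, 8))  # 6634204312890625: eight printable ASCII characters
# Worst case for 8 printable characters at an assumed 10 billion guesses per second:
print(round(worst_case_days(95, 8, 1e10), 1))
```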

Why it's not the best answer:

While password spraying is technically a type of brute-force attack, it is a very specific subset. In standard security terminology, a "brute-force attack" typically implies trying many passwords against a single username (e.g., trying password1, password2, password3... against jsmith).

The Key Difference:

The pattern in the log shows one password against many usernames, which is the definition of spraying. A traditional brute-force would show many passwords against one username. Therefore, Password spraying (A) is the most accurate and specific description of the attack pattern observed.
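The key difference can be expressed as a simple heuristic over failed attempts: count how many accounts each password was tried against, and how many passwords each account received. This Python sketch assumes log lines of the form `Login rejected - <username> - <password>`. The function names and the `min_targets` threshold of 3 are hypothetical tuning choices, not a standard.

```python
from collections import defaultdict

def parse_attempts(lines):
    """Extract (username, password) pairs from lines like 'Login rejected - jsmith - Spring2023'."""
    attempts = []
    for line in lines:
        parts = [part.strip() for part in line.split(" - ")]
        if len(parts) == 3 and parts[0] == "Login rejected":
            attempts.append((parts[1], parts[2]))
    return attempts

def classify(attempts, min_targets=3):
    """Label failed attempts as password spraying, brute-force, or inconclusive."""
    users_per_password = defaultdict(set)
    passwords_per_user = defaultdict(set)
    for user, password in attempts:
        users_per_password[password].add(user)
        passwords_per_user[user].add(password)
    # One password tried against many accounts -> spraying.
    if any(len(u) >= min_targets for u in users_per_password.values()):
        return "password spraying"
    # Many passwords tried against one account -> traditional brute force.
    if any(len(p) >= min_targets for p in passwords_per_user.values()):
        return "brute-force"
    return "inconclusive"

log = [
    "Login rejected - administrator - Spring2023",
    "Login rejected - jsmith - Spring2023",
    "Login rejected - guest - Spring2023",
]
print(classify(parse_attempts(log)))  # password spraying
```

Most production authentication logs do not record the attempted password, so practical detections usually pivot on the source address instead, flagging a source that fails against many distinct accounts in a short period.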

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 2.4: Given a scenario, analyze indicators of malicious activity.

Specifically, it tests your ability to analyze authentication logs to identify an attacker's TTPs (tactics, techniques, and procedures). Recognizing the pattern of a password spraying attack is a critical skill for a security analyst, as it requires a different mitigation strategy (e.g., banning common passwords, monitoring for a single password used across multiple accounts) than a traditional brute-force attack (which is best mitigated by account lockout policies).
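One of the mitigations mentioned above, banning common passwords, can be sketched as a deny-list check at password-change time. The exact entries and the season-plus-year pattern are hypothetical examples chosen to catch passwords like Spring2023; a production check would typically rely on a much larger list of known-compromised passwords.

```python
import re

# Hypothetical deny-list entries, compared case-insensitively.
BANNED_EXACT = {"password1", "welcome123"}

# A season followed by a 2- or 4-digit year and an optional symbol, e.g. Spring2023 or Winter24!
SEASON_YEAR = re.compile(
    r"^(spring|summer|autumn|fall|winter)(\d{2}|\d{4})[!@#$]?$", re.IGNORECASE
)

def is_banned(password: str) -> bool:
    """Return True if the password is deny-listed or matches the season+year pattern."""
    return password.lower() in BANNED_EXACT or SEASON_YEAR.match(password) is not None

for candidate in ["Spring2023", "Password1", "Winter24!", "correct horse battery staple"]:
    print(candidate, is_banned(candidate))
```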

A systems administrator would like to deploy a change to a production system. Which of the following must the administrator submit to demonstrate that the system can be restored to a working state in the event of a performance issue?

A. Backout plan

B. Impact analysis

C. Test procedure

D. Approval procedure

Explanation

The question centers on a key principle of change management: ensuring that any modification to a critical system can be safely reversed if it causes unexpected problems, such as performance degradation or system failure.

Why A. Backout Plan is the Correct Answer

A backout plan (also known as a rollback plan) is a predefined set of steps designed to restore a system to its previous, known-good configuration if a change fails or causes unacceptable disruption.

Purpose:

Its sole purpose is to provide a clear, documented path to reverse the change and return the system to a fully functional state as quickly as possible. This minimizes downtime and business impact.

Directly Addressing the Requirement:

The question asks for what demonstrates the system "can be restored to a working state in the event of a performance issue." A backout plan is explicitly this document. It details:

The specific steps to undo the change (e.g., run a specific script, restore from a backup, reboot with a previous configuration).

The personnel responsible for executing the rollback.

The conditions that trigger the backout procedure (e.g., "if CPU usage remains above 95% for more than 15 minutes").

The estimated time to complete the rollback.
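The example trigger condition ("if CPU usage remains above 95% for more than 15 minutes") can be written as an explicit check, which removes ambiguity about when the backout plan is invoked. This is a minimal Python sketch that assumes one CPU reading per minute. The function name and sample format are hypothetical, and in practice the condition would usually be configured in the organization's monitoring tool.

```python
CPU_THRESHOLD = 95.0    # percent, from the example trigger condition
SUSTAINED_MINUTES = 15  # the condition must persist longer than this

def should_back_out(samples, threshold=CPU_THRESHOLD, sustained=SUSTAINED_MINUTES):
    """Return True if CPU stays above `threshold` for more than `sustained` minutes.

    `samples` is a chronologically ordered list of (minute, cpu_percent) readings,
    assumed to be taken once per minute. Any reading at or below the threshold
    resets the clock.
    """
    run_start = None
    for minute, cpu in samples:
        if cpu > threshold:
            if run_start is None:
                run_start = minute
            if minute - run_start > sustained:
                return True
        else:
            run_start = None
    return False

# 17 consecutive readings at 97% span 16 minutes, so the trigger fires.
print(should_back_out([(m, 97.0) for m in range(17)]))  # True
# A dip at minute 10 resets the clock, so the trigger does not fire.
dip = [(m, 97.0 if m != 10 else 60.0) for m in range(20)]
print(should_back_out(dip))  # False
```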

Submitting a backout plan as part of a change request provides the Change Advisory Board (CAB) with the confidence that the administrator has considered the risks and has a concrete strategy for mitigation.

Why the Other Options Are Incorrect

B. Impact analysis

What it is:

An impact analysis is a document that assesses the potential consequences of the proposed change. It identifies which systems, services, and business processes might be affected, and to what degree (e.g., "This change will cause a 5-minute outage for the billing department").

Why it's incorrect:

While an impact analysis is a critical component of a change request, it does not, by itself, demonstrate how to fix a problem. It explains what might break, but not how to restore service. The backout plan is the actionable procedure that follows from a well-done impact analysis.

C. Test procedure

What it is:

A test procedure outlines the steps that will be taken to validate that the change works correctly before it is deployed to production. This is often done in a staging or development environment that mimics production.

Why it's incorrect:

The test procedure is about proving the change works as intended. The question, however, is about what to do when it doesn't work. The test procedure helps reduce the likelihood of needing a backout plan but does not serve as the plan to restore the system if the change fails.

D. Approval procedure

What it is:

The approval procedure is the workflow that a change request must follow to be authorized. It defines who has the authority to approve different types of changes (e.g., a major change may require CIO approval, while a minor one may only need a team lead's approval).

Why it's incorrect:

This is the process for getting permission to make the change. It is unrelated to the technical steps required to restore the system to a working state if the change causes an issue.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 1.3: Explain the importance of change management processes and the impact to security.

A formal change management process is a key operational security control. The backout plan is a mandatory element of any well-structured change request for a production system. It is a fundamental risk mitigation tool that ensures the organization can maintain availability (a core tenet of the CIA triad) even when implementing necessary changes.
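To show how a backout plan fits alongside the other change-management documents, here is a hypothetical Python sketch of a pre-submission check for a production change request. The `ChangeRequest` fields and the `REQUIRED_DOCUMENTS` policy are illustrative assumptions, not a standard CAB schema; each organization defines its own required elements.

```python
from dataclasses import dataclass

@dataclass
class ChangeRequest:
    """Hypothetical change request record; field names are illustrative only."""
    title: str
    impact_analysis: str = ""
    test_procedure: str = ""
    backout_plan: str = ""
    approved_by: str = ""

# Hypothetical policy: documents a production change must include before CAB review.
REQUIRED_DOCUMENTS = ("impact_analysis", "test_procedure", "backout_plan")

def missing_documents(cr: ChangeRequest) -> list:
    """Return the names of required documents that are empty."""
    return [name for name in REQUIRED_DOCUMENTS if not getattr(cr, name).strip()]

def ready_to_deploy(cr: ChangeRequest) -> bool:
    """A change may proceed only when every document exists and it has been approved."""
    return not missing_documents(cr) and bool(cr.approved_by.strip())

cr = ChangeRequest(
    title="Apply web server configuration change",
    impact_analysis="Brief outage for the web tier during restart",
    test_procedure="Validated in staging",
)
print(missing_documents(cr))  # ['backout_plan']
print(ready_to_deploy(cr))    # False
```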
